- •Matrix computations on systems equipped with GPUs

- •Introduction

- •The evolution of hardware for High Performance Computing

- •The programmability issue on novel graphics architectures

- •About this document. Motivation and structure

- •Motivation and goals

- •Structure of the document

- •Description of the systems used in the experimental study

- •Performance metrics

- •Hardware description

- •Software description

- •The FLAME algorithmic notation

- •The architecture of modern graphics processors

- •The graphics pipeline

- •Programmable pipeline stages

- •The Nvidia G80 as an example of the CUDA architecture

- •The architecture of modern graphics processors

- •General architecture overview. Nvidia Tesla

- •Memory subsystem

- •The GPU as a part of a hybrid system

- •Arithmetic precision. Accuracy and performance

- •Present and future of GPU architectures

- •Conclusions and implications on GPU computing

- •BLAS on single-GPU architectures

- •BLAS: Basic Linear Algebra Subprograms

- •BLAS levels

- •Naming conventions

- •Storage schemes

- •BLAS on Graphics Processors: NVIDIA CUBLAS

- •Evaluation of the performance of NVIDIA CUBLAS

- •Improvements in the performance of Level-3 NVIDIA CUBLAS

- •gemm-based programming for the Level-3 BLAS

- •Systematic development and evaluation of algorithmic variants

- •Experimental results

- •Impact of the block size

- •Performance results for rectangular matrices

- •Performance results for double precision data

- •Padding

- •Conclusions

- •LAPACK-level routines on single-GPU architectures

- •LAPACK: Linear Algebra PACKage

- •LAPACK and BLAS

- •Naming conventions

- •Storage schemes and arguments

- •LAPACK routines and organization

- •Cholesky factorization

- •Scalar algorithm for the Cholesky factorization

- •Blocked algorithm for the Cholesky factorization

- •Computing the Cholesky factorization on the GPU

- •Basic implementations. Unblocked and blocked versions

- •Padding

- •Hybrid implementation

- •LU factorization

- •Scalar algorithm for the LU factorization

- •Blocked algorithm for the LU factorization

- •LU factorization with partial pivoting

- •Computing the LU factorization with partial pivoting on the GPU

- •Basic implementations. Unblocked and blocked versions

- •Padding and hybrid algorithm

- •Reduction to tridiagonal form on the graphics processor

- •The symmetric eigenvalue problem

- •Reduction to tridiagonal form. The LAPACK approach

- •Reduction to tridiagonal form. The SBR approach

- •Experimental Results

- •Conclusions

- •Matrix computations on multi-GPU systems

- •Linear algebra computation on multi-GPU systems

- •Programming model and runtime. Performance considerations

- •Programming model

- •Transfer management and spatial assignation

- •Experimental results

- •Impact of the block size

- •Number of data transfers

- •Performance and scalability

- •Impact of data distribution

- •Conclusions

- •Matrix computations on clusters of GPUs

- •Parallel computing memory architectures

- •Shared memory architectures

- •Distributed memory and hybrid architectures

- •Accelerated hybrid architectures

- •Parallel programming models. Message-passing and MPI

- •ScaLAPACK

- •PLAPACK

- •Elemental

- •Description of the PLAPACK infrastructure

- •Layered approach of PLAPACK

- •Usage of the PLAPACK infrastructure. Practical cases

- •Porting PLAPACK to clusters of GPUs

- •Experimental results

- •Conclusions

- •Conclusions

- •Conclusions and main contributions

- •Contributions for systems with one GPU

- •Contributions for clusters of GPUs

- •Related publications

- •Publications directly related with the thesis topics

- •Publications indirectly related with the thesis topics

- •Other publications

- •Open research lines

- •FLAME algorithms for the BLAS-3 routines

7.2. RELATED PUBLICATIONS

with clear indications that a better performance/power ratio can be achieved using more specialized hardware (accelerators), such as SSE-based units or GPUs, clearly deviating from the easy-to-understand shared-memory homogeneous architectures. This paper investigates if OpenMP could still survive in this new scenario and proposes a possible way to extend the current specification to reasonably integrate heterogeneity while preserving simplicity and portability. The paper leverages on a previous proposal that extended tasking with dependencies. The runtime is in charge of data movement, tasks scheduling based on these data dependencies, and the appropriate selection of the target accelerator depending on system configuration and resource availability.

AYGUAD´E, E., BADIA, R., BELLENS, P., CABRERA, D., DURAN, A., FERRER, R., GONZA- JOURNAL

˜

LEZ, M., IGUAL, F., JIMENEZ-GONZA¡LEZ, D., LABARTA, J., MARTINELL, L., MARTORELL, X.,

MAYO, R., PEREZ, J., PLANAS, J., AND QUINTANA-ORT´ı, E. Extending OpenMP to survive the heterogeneous multi-core era. International Journal of Parallel Programming 38 (2010), 440–459.

This paper advances the state-of-the-art in programming models for exploiting task-level parallelism on heterogeneous many-core systems, presenting a number of extensions to the OpenMP language inspired in the StarSs programming model. The proposed extensions allow the programmer to write portable code easily for a number of di erent platforms, relieving him/her from developing the specific code to o -load tasks to the accelerators and the synchronization of tasks. Our results obtained from the StarSs instantiations for SMPs, the Cell, and GPUs report reasonable parallel performance. However, the real impact of our approach in is the productivity gains it yields for the programmer.

Chapter 6. Matrix computations on clusters of GPUs

In [65], we introduce the porting of the PLAPACK infrastructure to clusters of GPUs:

FOGUE, M., IGUAL, F. D., QUINTANA-ORT´ı, E. S., AND VAN DE GEIJN, R. A. Retargeting

plapack to clusters with hardware accelerators. In International Conference on High Performance Computing and Simulation (HPCS 2010) (2010), pp. 444 –451.

Hardware accelerators are becoming a highly appealing approach to boost the raw performance as well as the price-performance and power-performance ratios of current clusters. In this paper we present a strategy to retarget PLAPACK, a library initially designed for clusters of nodes equipped with general-purpose processors and a single address space per node, to clusters equipped with graphics processors (GPUs). In our approach data are kept in the device memory and only retrieved to main memory when they have to be communicated to a di erent node. Here we benefit from the object-based orientation of PLAPACK which allows all communication between host and device to be embedded within a pair of routines, providing a clean abstraction that enables an e cient and direct port of all the contents of the library. Our experiments in a cluster consisting of 16 nodes with two NVIDIA Quadro FX5800 GPUs each show the performance of our approach.

CONFERENCE

PROCEEDINGS

7.2.2.Publications indirectly related with the thesis topics

Related to dense linear algebra implementations on systems with one or multiple GPUs, a parallel research has been performed regarding out-of-core computations using hardware accelerators.

209

CHAPTER 7. CONCLUSIONS

JOURNAL

JOURNAL

CONFERENCE

PROCEEDINGS

In these publications, we explore the possibility of solving large dense linear systems stored on disk, accelerating in-core calculations by using the graphics processors. Those local routines are based on the BLAS implementations proposed in Chapter 3. The work in [40] presents a MATLAB/OCTAVE interface to accelerate out-of-core computations using hardware accelerators in the framework of linear algebra. In [60] we propose a novel strategy to e ciently virtualize graphics processors on high performance clusters:

QUINTANA-ORT´ı, G., IGUAL, F., MARQU´ES, M., QUINTANA-ORT´ı, E. S., AND VAN DE GEIJN,

R. Programming OOC matrix algorithms-by-tiles on multithreaded architectures. ACM Trans. Math. Softw. (Submitted).

CASTILLO, M., IGUAL, F. D., MARQU´ES, M., MAYO, R., QUINTANA-ORT´ı, E. S., QUINTANA-

ORT´ı, G., RUBIO, R., AND VAN DE GEIJN, R. A. Out-of-core solution of linear systems on graphics processors. International Journal of Parallel, Emergent and Distributed Systems 24, 6 (2009), 521–538.

DUATO, J., IGUAL, F. D., MAYO, R., PENA˜ , A. J., QUINTANA-ORT´ı, E. S., AND SILLA, F. An e cient implementation of GPU virtualization in high performance clusters. In Euro-Par Workshops (2009), pp. 385–394.

JOURNAL

CONFERENCE

PROCEEDINGS

7.2.3.Other publications

Image processing is a discipline in which GPUs have historically delivered near-optimal performances. As an orthogonal research line, several publications in this field have been obtained during the development of this thesis. These publications are focused on a lower level approach, presenting fine-grained optimizations and ad-hoc improvements for current GPUs on biomedical image processing. We list some of the most important publications in this area:

IGUAL, F., MAYO, R., HARTLEY, T., CATALYUREK, U., RUIZ, A., AND UJALDON, M. Color

and texture analysis on emerging parallel architectures. Journal of High Performance Computing Applications (2010). (Published online).

HARTLEY, T. D., CATALYUREK, U., RUIZ, A., IGUAL, F., MAYO, R., AND UJALDON, M.

Biomedical image analysis on a cooperative cluster of gpus and multicores. In Proceedings of the 22nd annual international conference on Supercomputing (New York, NY, USA, 2008), ICS ’08, ACM, pp. 15–25.

CONFERENCE

PROCEEDINGS

IGUAL, F., MAYO, R., HARTLEY, T., CATALYUREK, U., RUIZ, A., AND UJALDON, M.

Exploring the gpu for enhancing parallelism on color and texture analysis. In From Multicores and GPUs to Petascale. 14th International Conference on Parallel Computing (ParCo 2009) (2010), vol. 19 of Advances in Parallel Computing, IOS Press, pp. 299–306.

CONFERENCE

PROCEEDINGS

IGUAL, F., MAYO, R., HARTLEY, T., CATALYUREK, U., RUIZ, A., AND UJALDON, M.

Optimizing co-occurrence matrices on graphics processors using sparse representations. In 9th International Workshop on State-of-the-Art in Scientific and Parallel Computing (PARA 2008). (To appear as Lecture Notes in Computer Science).

7.3.Software e orts and technological transfer

The insights and e orts in the framework of this thesis have been translated into software products and collaborations with companies. The software e orts include the release of libflame [149] as an open source library at the disposal of the scientific community. As of its release date, the

210

7.3. SOFTWARE EFFORTS AND TECHNOLOGICAL TRANSFER

Operation |

LAPACK |

libflame |

FLAME/C |

FLASH |

GPU |

type |

|

name |

name |

support |

support |

||||

|

|

|

|||||

Level-3 BLAS |

|

|

√ |

√ |

√ |

|

|

General matrix-matrix multiply |

?gemm |

Gemm |

sdcz |

||||

Hermitian matrix-matrix multiply |

?hemm |

Hemm |

√ |

√ |

√ |

sdcz |

|

Hermitian rank-k update |

?herk |

Herk |

√ |

√ |

√ |

sdcz |

|

Hermitian rank-2k update |

?her2k |

Her2k |

√ |

√ |

√ |

sdcz |

|

Symmetric matrix-matrix multiply |

?symm |

Symm |

√ |

√ |

√ |

sdcz |

|

Symmetric rank-k update |

?syrk |

Syrk |

√ |

√ |

√ |

sdcz |

|

Symmetric rank-2k update |

?syr2k |

Syr2k |

√ |

√ |

√ |

sdcz |

|

Triangular matrix multiply |

?trmm |

Trmm |

√ |

√ |

√ |

sdcz |

|

Triangular solve with multiple right-hand sides |

?trsm |

Trsm |

√ |

√ |

√ |

sdcz |

|

LAPACK-level |

|

|

√ |

√ |

√ |

|

|

Cholesky factorization |

?potrf |

Chol |

sdcz |

||||

LU factorization without pivoting |

N/A |

LU nopiv |

√ |

√ |

√ |

sdcz |

|

LU factorization with partial pivoting |

?getrf |

LU piv |

√ |

√ |

√ |

sdcz |

|

LU factorization with incremental pivoting |

N/A |

LU incpiv |

√ |

√ |

|

sdcz |

|

QR factorization (via UT Householder transforms) |

?geqrf |

QR UT |

√ |

|

sdcz |

||

QR factorization (via incremental UT Householder trans.) |

N/A |

QR UT inc |

√ |

|

sdcz |

||

LQ factorization (via UT Householder transforms) |

?gelqf |

LQ UT |

|

|

sdcz |

||

Up-and-Downdate Cholesky/QR factor |

N/A |

UDdate UT |

√ |

|

|

sdcz |

|

Up-and-Downdate Cholesky/QR factor |

N/A |

UDdate UT inc |

|

√ |

|

sdcz |

|

(via incremental UT Householder-like transforms) |

|

|

√ |

√ |

√ |

|

|

Triangular matrix inversion |

?trtri |

Trinv |

sdcz |

||||

Triangular transpose matrix multiply |

?lauum |

Ttmm |

√ |

√ |

√ |

sdcz |

|

Symmetric/Hermitian positive definite inversion |

?potri |

SPDinv |

√ |

√ |

√ |

sdcz |

|

Triangular Sylvester equation solve |

?trsyl |

Sylv |

√ |

√ |

√ |

sdcz |

|

Reduction from a symmetric/Hermitian definite generalized |

[sc]sygst |

Eig gest |

√ |

√ |

√ |

sdcz |

|

eigenproblem to standard form |

[cz]hegst |

|

√ |

|

|

|

|

Reduction to upper Hessenberg form |

?gehrd |

Hess UT |

|

|

sdcz |

||

Reduction to tridiagonal form |

[sd]sytrd |

Tridiag UT |

√ |

|

|

sdcz |

|

|

[cz]hetrd |

|

√ |

|

|

|

|

Reduction to bidiagonal form |

?gebrd |

Bidiag UT |

|

|

sdcz |

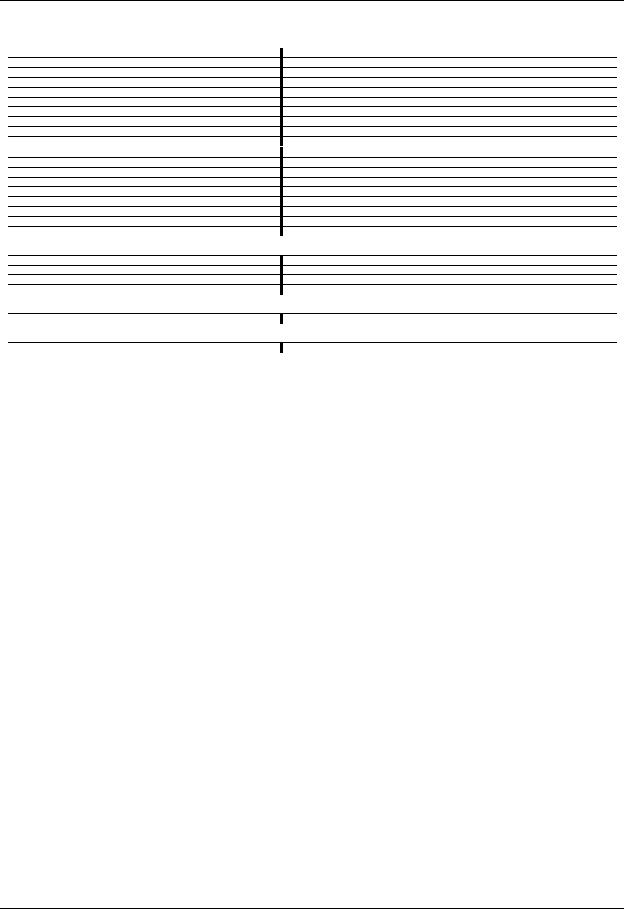

Table 7.1: Dense linear algebra operations supported by libflame, which has full support for all four of the floating point data types: single and double precision real, single and double precision complex; ? expands to one of {sdcz}.

library was the only dense linear algebra software product with multi-GPU capabilities and a wide functionality. It implements a large subset of the LAPACK functionality and a major part of the techinques illustrated in Chapter 5. A detailed description of the functionalities of the libflame library can be found in Table 7.1.

The interest on this type of run-time systems has been translated into collaborations and awards from well-known companies:

MICROSOFT RESEARCH showed their interest in the three main research lines presented in this thesis (developments for single-GPU, multi-GPU and clusters of GPUs). As part of the agreement with the company, codes for BLAS on one and multiple GPUs, LAPACK-level approaches on one and multiple GPUs, and similar routines for distributed memory approaches will be integrated in libflame with the support of MICROSOFT. A commercial license of the library has been acquired by MICROSOFT as part of the agreement.

NVIDIA granted the HPC&A group with the NVIDIA Professor Partnership Award 2008 for its work on multi-GPU systems. At the same time, many of the graphics hardware used for performance evaluation in this document have been generously donated by the company in the framework of this collaboration.

PETAPATH, manufacturers of ClearSpeed boards [50] signed an agreement with the HPC&A group to demonstrate the adaptability of the multi-GPU system developed to heterogeneous systems with other type of hardware accelerators. A prototype of the GPUSs runtime system was also developed and tested on this type of platforms.

211

CHAPTER 7. CONCLUSIONS

7.4.Open research lines

GPU Computing is a relatively novel discipline, and thus many research lines remain open after the conclusion of this thesis. Some of them can be adapted from existing ideas from other arenas; others are new; it is likely that the last group of ideas will evolve with graphic architectures and programming paradigms.

The following list details some of the open research lines related to this thesis:

The NVIDIA CUBLAS versions used for the evaluation and development of the ideas in this thesis do not support the overlapping of calculations on the GPU and data transfers. With the advent of newer versions that support this feature, the introduction of overlapping techniques on both single-GPU, multi-GPU and clusters of GPUs will open a new research line in order to hide the bus latency. More specifically, the runtime-based approach for systems with multiple GPUs will require a full redesign in order to deal and exploit this overlapping capabilities.

Although the port of PLAPACK to clusters of GPUs combines a better programmability for message-passing architectures and remarkable performance, will soon be replaced by Elemental [113]. Fortunately, many of the design decisions in the Elemental framework are similar to those adopted in the early development of PLAPACK. A port of the Elemental framework is also in mind to adapt it to clusters of GPUs.

In the adaptation of message-passing libraries, inter-node parallelism and data transfer reduction between processes is accomplished by an appropriate algorithm choose. In the case described in this thesis, one process per GPU is spawn. However, when more than one GPU per node is available, data transfers between memory spaces can be redundant using this approach. An alternative approach would be to employ one process per node, relying the management of multiple GPUs inside the node to a run-time system as that described in Chapter 5.

The improvements described in Chapter 5 pursue the goal of data transfer reduction, without taking into account the scheduling policies to accomplish it. An alternative, but compatible approach is based in the modification of scheduling policies in order to assign tasks to the most a ne computing resource, using a technique usually referred as cache a nity [44]. This techniques have already been implemented in the public release of libflame, but further research is still in the roadmap.

While GPUs o er a near-optimal GFLOPS/price ratio, the main disadvantage of this hardware is power consumption. Energy-aware GPU computing is a field to be explored in the near future. Run-time systems provide a powerful tool to monitor and manage the configuration of the di erent computing units (in this case GPUs) according to their execution status, or the ability to redesign the scheduling policies in order to take into account the power consumption issue.

Other improvements and research lines will be ultimately dictated by the technological evolution of graphics hardware. To name three possible improvement scenarios related to each one of the parts of this thesis:

•Simultaneous execution of kernels can boost performance of block-oriented BLAS-3 algorithms by executing, if possible, the operations on the blocks simultaneously on a single-GPU system. This feature is already included in the latest NVIDIA GPUs.

212

7.4.OPEN RESEARCH LINES

•An scenario where direct GPU-GPU communication on multi-GPU systems is possible in the near future. In this case, provided the PCIExpress bus would disappear as the main bottleneck in the system, other strategies can be considered to improve performance. More intelligent scheduling policies, in which tasks are mapped to the most a ne accelerator (considering which data are necessary for the execution of the task and where those data are located) have already been investigated in the framework of the libflame development [42].

•Future technological improvements include direct communication between GPU memories via interconnection networks (namely Infiniband). Adapting those new technologies to our developments would yield higher performance at no cost from the programmability level.

•Current hardware trends include the integration of the GPU as an on-chip co-processor to the general-purpose unit. NVIDIA has recently revealed the integration of ARM processors and graphics processors, and AMD has developed similar products in the framework of the FUSION project. If this novel architectures are sucessful, many of the techniques and methodologies proposed in this thesis are likely to need further adaptation to them. However, we believe that many of the ideas and techniques investigated would have a relevant impact on the performance of dense linear algebra implementations on these novel architectures without dramatic conceptual modifications.

213

CHAPTER 7. CONCLUSIONS

214