- •Matrix computations on systems equipped with GPUs

- •Introduction

- •The evolution of hardware for High Performance Computing

- •The programmability issue on novel graphics architectures

- •About this document. Motivation and structure

- •Motivation and goals

- •Structure of the document

- •Description of the systems used in the experimental study

- •Performance metrics

- •Hardware description

- •Software description

- •The FLAME algorithmic notation

- •The architecture of modern graphics processors

- •The graphics pipeline

- •Programmable pipeline stages

- •The Nvidia G80 as an example of the CUDA architecture

- •The architecture of modern graphics processors

- •General architecture overview. Nvidia Tesla

- •Memory subsystem

- •The GPU as a part of a hybrid system

- •Arithmetic precision. Accuracy and performance

- •Present and future of GPU architectures

- •Conclusions and implications on GPU computing

- •BLAS on single-GPU architectures

- •BLAS: Basic Linear Algebra Subprograms

- •BLAS levels

- •Naming conventions

- •Storage schemes

- •BLAS on Graphics Processors: NVIDIA CUBLAS

- •Evaluation of the performance of NVIDIA CUBLAS

- •Improvements in the performance of Level-3 NVIDIA CUBLAS

- •gemm-based programming for the Level-3 BLAS

- •Systematic development and evaluation of algorithmic variants

- •Experimental results

- •Impact of the block size

- •Performance results for rectangular matrices

- •Performance results for double precision data

- •Padding

- •Conclusions

- •LAPACK-level routines on single-GPU architectures

- •LAPACK: Linear Algebra PACKage

- •LAPACK and BLAS

- •Naming conventions

- •Storage schemes and arguments

- •LAPACK routines and organization

- •Cholesky factorization

- •Scalar algorithm for the Cholesky factorization

- •Blocked algorithm for the Cholesky factorization

- •Computing the Cholesky factorization on the GPU

- •Basic implementations. Unblocked and blocked versions

- •Padding

- •Hybrid implementation

- •LU factorization

- •Scalar algorithm for the LU factorization

- •Blocked algorithm for the LU factorization

- •LU factorization with partial pivoting

- •Computing the LU factorization with partial pivoting on the GPU

- •Basic implementations. Unblocked and blocked versions

- •Padding and hybrid algorithm

- •Reduction to tridiagonal form on the graphics processor

- •The symmetric eigenvalue problem

- •Reduction to tridiagonal form. The LAPACK approach

- •Reduction to tridiagonal form. The SBR approach

- •Experimental Results

- •Conclusions

- •Matrix computations on multi-GPU systems

- •Linear algebra computation on multi-GPU systems

- •Programming model and runtime. Performance considerations

- •Programming model

- •Transfer management and spatial assignation

- •Experimental results

- •Impact of the block size

- •Number of data transfers

- •Performance and scalability

- •Impact of data distribution

- •Conclusions

- •Matrix computations on clusters of GPUs

- •Parallel computing memory architectures

- •Shared memory architectures

- •Distributed memory and hybrid architectures

- •Accelerated hybrid architectures

- •Parallel programming models. Message-passing and MPI

- •ScaLAPACK

- •PLAPACK

- •Elemental

- •Description of the PLAPACK infrastructure

- •Layered approach of PLAPACK

- •Usage of the PLAPACK infrastructure. Practical cases

- •Porting PLAPACK to clusters of GPUs

- •Experimental results

- •Conclusions

- •Conclusions

- •Conclusions and main contributions

- •Contributions for systems with one GPU

- •Contributions for clusters of GPUs

- •Related publications

- •Publications directly related with the thesis topics

- •Publications indirectly related with the thesis topics

- •Other publications

- •Open research lines

- •FLAME algorithms for the BLAS-3 routines

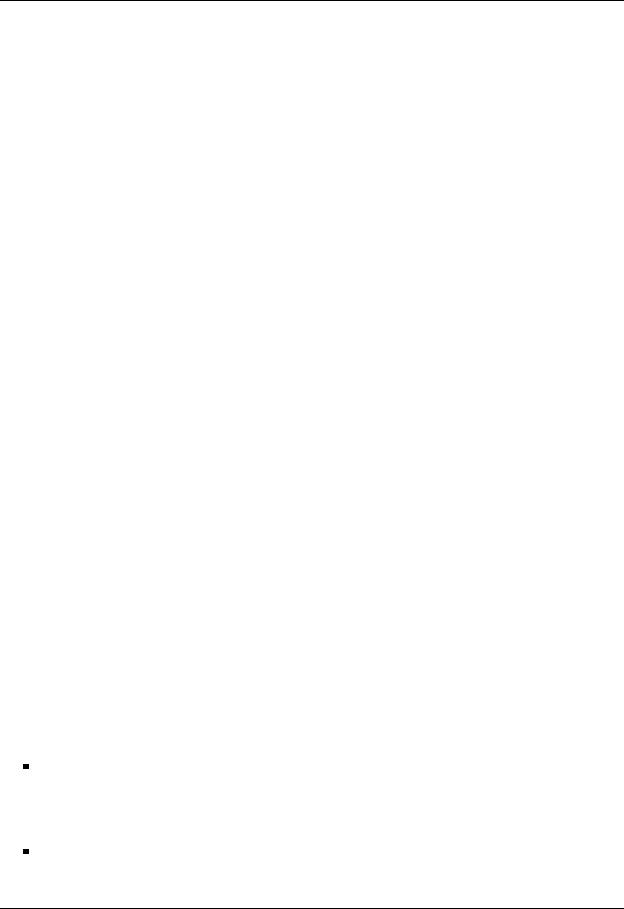

5.4. EXPERIMENTAL RESULTS

|

1 |

2 |

3 |

4 |

Techniques |

- |

Data-a nity |

Data-a nity |

Data-a nity |

|

|

|

|

|

|

- |

Own/alien blocks |

Own/alien blocks |

Own/alien blocks |

|

- |

Write-through |

Write-through |

Write-back |

|

|

|

|

|

|

|

|

|

|

Software cache |

- |

- |

Alien blocks cached |

Alien blocks cached |

|

- |

- |

Write-invalidate |

Write-invalidate |

|

|

|

|

|

|

|

|

|

|

Benefits |

- |

Reduce number of writes |

Reduce number of writes |

Reduce number of reads |

|

|

to GPU (own blocks) |

to GPU (alien blocks) |

from GPU |

Table 5.6: Summary of the techniques and benefits introduced by the successive improvements in the runtime.

Version 2: Static data layout combined with write-through. The number of writes of own blocks is reduced as own blocks already transferred to a GPU are not transferred there again by future instructions.

Version 3: Software-cache of alien blocks. The number of writes of alien blocks is reduced by keeping them in a cache managed in software.

Version 4: Software-cache of alien blocks with write-back. The number of reads of own blocks is reduced by only maintaining the coherence between main memory and GPU memories when it is strictly necessary.

As an additional advantage, the improvements introduced in the runtime and the design of the runtime itself are independent from the underlying architecture. Assume a system consisting of a machine with a central memory pool with multiple hardware accelerators connected to it, each one with an independent memory space. With this setup, the techniques exposed above and their benefits can implemented with minor modifications.

5.4.Experimental results

5.4.1.Impact of the block size

The block size is a critical factor to attain high performance in algorithms-by-blocks. Independently of the techniques used to reduce data transfers, to improve data a nity, etc. an incorrect election of this parameter lead to sub-optimal performance. The first set of experiments aims at determining the optimal value for the block size, as well as its e ect on the final performance of the parallel implementation.

In our case, the optimal value for the block size is a trade-o between a number of factors:

Potential parallelism: a smaller block size is translated into a finer granularity. A larger block dimension leads to less concurrency. Given the pool of execution units available in the system, the size of the block greatly influences the degree of parallel execution on the sub-problems, and reduces idle times.

Data transfer e ciency: a small block size is translated into a larger number of data transfers of reduced dimension. The latency of the PCI-Express bus plays a critical role in this case, and data transfers of small blocks have a significant performance penalty. Conversely, a

147

CHAPTER 5. MATRIX COMPUTATIONS ON MULTI-GPU SYSTEMS

larger block dimension translates into higher e ective bandwidth, and thus benefits the final performance of the implementation.

Inner BLAS kernels performance: as shown in Chapter 3, the BLAS implementations on the GPU deliver higher performances when operating with relatively large matrices, as those derived from the utilization of a big block size.

Taking into account the combination of these factors, the performance of parallel implementations will be a ected by the chosen block size from two perspectives:

1.If the block size is too small, data transfers become a serious bottleneck in the computation, and BLAS executions are ine cient on the GPU.

2.If the block size is too large, the degree of concurrency decreases and resources are wasted. In addition, data transfers through the bus are e cient, and so are BLAS executions.

In summary, a thorough analysis of the performance of the runtime system for a given linear algebra algorithm-by-blocks should show an increasing performance as the block size is increased (and thus, BLAS executions and data transfers are more e cient), attaining a peak point as potential parallelism in the algorithm reaches the optimal point. From this dimension on, parallelism decreases, and thus parallel e ciency should drop.

Consider for example the performance results in Figure 5.7. The figure shows the performance of the right-looking variant of the Cholesky factorization in GFLOPS (Y axis) for the most tuned version of the runtime implementation for several matrix sizes (Z axis), with block size (X axis) increasing from a relatively small size (b = 128) to a larger one (b = 1600). In this example, the four GPUs available on TESLA2 were used, following a 2-D data layout. Similar results have been observed for the rest of the implementations of the runtime and data a nity options. The figure o ers information on the optimal block size for each matrix size.

As predicted, the behavior of the performance curves for each matrix size is quite regular and predictable. In each case, performance is increased as the block size grows until it reaches an optimal value. From this point on, a drop in the performance rate occurs. These results demonstrate the assumptions made on the BLAS and data transfer performance, and potential parallelism. In fact, note how the optimal block size (shown in the figure as a green line) is also increased as matrix size grows. Thus, taking into account only the potential parallelism factor, the optimal block size partially depends on the total matrix dimension.

For the rest of the experimental results in this chapter, performance values for the optimal block size are shown. A complete analysis of the performance attained using a wide range of block sizes has been carried out for each operation and runtime version.

5.4.2.Number of data transfers

Once the optimal block size is found and fixed for a given matrix size, the benefits of the di erent implementations of the runtime are mostly dictated by the number of data transfers necessary to perform a given operation. In fact, the reduction in the number of data transfers is the main goal of the successive improvements in the runtime implementation.

To demonstrate the benefits of the incremental improvements introduced from a basic runtime version, the next experiment evaluates the performance of the implementation of the algorithm- by-blocks for the Cholesky factorization (right-looking variant) on the four GPUs of TESLA2, for a fixed block size (b = 768), using the four versions of the runtime, and a 2-D data layout when

148

5.4. EXPERIMENTAL RESULTS

Cholesky performance varying the block size on TESLA2

GFLOPS

750

600

450

300

150

0 |

|

20000 |

|

16000 |

|

Matrix |

12000 |

8000 |

|

|

size |

4000

Optimal block size

Optimal block size

|

|

1152 |

1408 |

|

640 |

896 |

|

|

size |

|

|

0 |

384 |

Block |

|

128 |

|

|

Figure 5.7: Performance of the Cholesky factorization using the 4 GPUs in TESLA2, varying the block size. Runtime version 4.

Number of transfers

Number of transfers to GPU (Cholesky fact.)

|

|

Number of transfers to GPU memory - Version 1 |

|

|||||||

10000 |

|

Number of transfers to GPU memory - Version 2 |

|

|||||||

|

Number of transfers to GPU memory - Version 3 |

|

||||||||

|

|

|

||||||||

|

|

Number of transfers to GPU memory - Version 4 |

|

|||||||

8000 |

|

|

|

|

|

|

|

|

|

|

6000 |

|

|

|

|

|

|

|

|

|

|

4000 |

|

|

|

|

|

|

|

|

|

|

2000 |

|

|

|

|

|

|

|

|

|

|

0 |

|

|

|

|

|

|

|

|

|

|

0 |

2000 |

4000 |

6000 |

8000 |

10000 |

12000 |

14000 |

16000 |

18000 |

20000 |

Matrix size

Number of transfers

Number of transfers to main memory (Cholesky fact.)

|

|

Number of transfers to main memory - Version 1 |

|

|||||||

3500 |

|

Number of transfers to main memory - Version 2 |

|

|||||||

|

Number of transfers to main memory - Version 3 |

|

||||||||

|

|

Number of transfers to main memory - Version 4 |

|

|||||||

3000 |

|

|

|

|

|

|

|

|

|

|

2500 |

|

|

|

|

|

|

|

|

|

|

2000 |

|

|

|

|

|

|

|

|

|

|

1500 |

|

|

|

|

|

|

|

|

|

|

1000 |

|

|

|

|

|

|

|

|

|

|

500 |

|

|

|

|

|

|

|

|

|

|

0 |

|

|

|

|

|

|

|

|

|

|

0 |

2000 |

4000 |

6000 |

8000 |

10000 |

12000 |

14000 |

16000 |

18000 |

20000 |

Matrix size

Figure 5.8: Number of data transfers for the Cholesky factorization using 4 GPUs on TESLA2. Block size is b = 768.

149

CHAPTER 5. MATRIX COMPUTATIONS ON MULTI-GPU SYSTEMS

appropriate (versions 2 to 4). In this case, instead of raw performance attained by each one version, we analyze the number of data transfers to and from the GPU memory. We consider the number of writes (transfers to GPU memory) and reads (transfers to main memory) for each runtime version. Thus, the aim of the experiment is to evaluate the impact on the number of data transfers, and to demonstrate how each improvement in the runtime a ects the writes or the reads of data (or even both).

Figure 5.8 reports the amount of data transfers for the experiment described above. The lefthand side plot shows the amount of writes for each implementation of the runtime; the plot on the right-hand side shows the corresponding number of reads.

Let us focus first on the left plot. The number of writes is dramatically reduced by the application of two di erent techniques:

Data-a nity and the concept of own and alien blocks in version 2 of the runtime greatly reduces the amount of necessary data transfers from main memory to GPU memory for those blocks considered as property of the assigned GPU. As an example, consider the reduction in the number of data writes for a matrix size of n = 20,480. In this case, data transfers from main to GPU memory are reduced from 10,179 in the basic runtime implementation to 4,590 in the implementation introducing data-a nity, saving thus more than half of the total data transfers.

The usage of a cache of alien blocks in the GPU further decreases the number of data writes, as non-proprietary blocks that are read once by a given GPU do not have to be transferred again if used in a future instruction (unless they are invalidated by the proprietary of the block). This improvement is shared by versions 3 and 4 of the runtime, as the way caches work in both versions is common from the point of view of the data writes. For a matrix size n = 20,480, the number of writes is reduced from 10,179 to 1,106.

To sum up, the introduction of data-a nity and a software cache can reduce the amount of transfers from main memory to GPU memory in a factor 9× for the tested matrix sizes.

An inspection of data reads reveals that the benefits are similar. In this case, no transfer reduction is introduced in the three first versions of the runtime. However, the introduction of write-back as the coherency policy between main memory and GPU memory in version 4 reduces the amount of reads dramatically. For instance, for a matrix size n = 20,480, the amount of reads from main to GPU memory is reduced from 3,627 in versions 1-3 to 377 in version 4 of the runtime.

5.4.3.Performance and scalability

The progressive reduction of the amount of data transfers derived from the improvements introduced in the run-time system yields direct gains in the performance of the implemented linear algebra routines. To evaluate how this reduction impacts the final performance of the parallel executions, we show the rates attained for the Cholesky factorization using the four GPUs of TESLA2. For this experiment, all BLAS routines are mapped to NVIDIA CUBLAS calls. The factorization of the diagonal blocks is performed in the multi-core processor, following the hybrid approach introduced in Chapter 4, as the optimal block size is small and this particular operation is more suited for this type of processor.

We propose an evaluation of the performance and scalability of the three algorithmic variants for the Cholesky factorization analogous to those shown in Chapter 4 (see Figure 4.1). We evaluate how the successive improvements introduced in the transfers management a ect the performance of each algorithmic variant.

150