- •Matrix computations on systems equipped with GPUs

- •Introduction

- •The evolution of hardware for High Performance Computing

- •The programmability issue on novel graphics architectures

- •About this document. Motivation and structure

- •Motivation and goals

- •Structure of the document

- •Description of the systems used in the experimental study

- •Performance metrics

- •Hardware description

- •Software description

- •The FLAME algorithmic notation

- •The architecture of modern graphics processors

- •The graphics pipeline

- •Programmable pipeline stages

- •The Nvidia G80 as an example of the CUDA architecture

- •The architecture of modern graphics processors

- •General architecture overview. Nvidia Tesla

- •Memory subsystem

- •The GPU as a part of a hybrid system

- •Arithmetic precision. Accuracy and performance

- •Present and future of GPU architectures

- •Conclusions and implications on GPU computing

- •BLAS on single-GPU architectures

- •BLAS: Basic Linear Algebra Subprograms

- •BLAS levels

- •Naming conventions

- •Storage schemes

- •BLAS on Graphics Processors: NVIDIA CUBLAS

- •Evaluation of the performance of NVIDIA CUBLAS

- •Improvements in the performance of Level-3 NVIDIA CUBLAS

- •gemm-based programming for the Level-3 BLAS

- •Systematic development and evaluation of algorithmic variants

- •Experimental results

- •Impact of the block size

- •Performance results for rectangular matrices

- •Performance results for double precision data

- •Padding

- •Conclusions

- •LAPACK-level routines on single-GPU architectures

- •LAPACK: Linear Algebra PACKage

- •LAPACK and BLAS

- •Naming conventions

- •Storage schemes and arguments

- •LAPACK routines and organization

- •Cholesky factorization

- •Scalar algorithm for the Cholesky factorization

- •Blocked algorithm for the Cholesky factorization

- •Computing the Cholesky factorization on the GPU

- •Basic implementations. Unblocked and blocked versions

- •Padding

- •Hybrid implementation

- •LU factorization

- •Scalar algorithm for the LU factorization

- •Blocked algorithm for the LU factorization

- •LU factorization with partial pivoting

- •Computing the LU factorization with partial pivoting on the GPU

- •Basic implementations. Unblocked and blocked versions

- •Padding and hybrid algorithm

- •Reduction to tridiagonal form on the graphics processor

- •The symmetric eigenvalue problem

- •Reduction to tridiagonal form. The LAPACK approach

- •Reduction to tridiagonal form. The SBR approach

- •Experimental Results

- •Conclusions

- •Matrix computations on multi-GPU systems

- •Linear algebra computation on multi-GPU systems

- •Programming model and runtime. Performance considerations

- •Programming model

- •Transfer management and spatial assignation

- •Experimental results

- •Impact of the block size

- •Number of data transfers

- •Performance and scalability

- •Impact of data distribution

- •Conclusions

- •Matrix computations on clusters of GPUs

- •Parallel computing memory architectures

- •Shared memory architectures

- •Distributed memory and hybrid architectures

- •Accelerated hybrid architectures

- •Parallel programming models. Message-passing and MPI

- •ScaLAPACK

- •PLAPACK

- •Elemental

- •Description of the PLAPACK infrastructure

- •Layered approach of PLAPACK

- •Usage of the PLAPACK infrastructure. Practical cases

- •Porting PLAPACK to clusters of GPUs

- •Experimental results

- •Conclusions

- •Conclusions

- •Conclusions and main contributions

- •Contributions for systems with one GPU

- •Contributions for clusters of GPUs

- •Related publications

- •Publications directly related with the thesis topics

- •Publications indirectly related with the thesis topics

- •Other publications

- •Open research lines

- •FLAME algorithms for the BLAS-3 routines

4.2. CHOLESKY FACTORIZATION

4.2.Cholesky factorization

One of the most common strategies for the solution of linear equation systems commences with the factorization of the coe cient matrix, decomposing it as the product of two triangular matrices. These factors are used in a subsequent stage to obtain the solution of the original system by solving two triangular systems. The LU and the Cholesky factorization are decompositions of this type.

The LU factorization, combined with a partial row pivoting during the factorization process, is the common method to solve general linear systems of the type Ax = b. The Cholesky factorization plays the same role when the coe cient matrix A is symmetric and positive definite.

Definition A symmetric matrix A Rn×n is positive definite if xT Ax > 0 for all nonzero x Rn.

The following definition establishes the necessary conditions for a matrix to be invertible, a necessary property for the calculation of both the Cholesky and the LU factorizations.

Definition A matrix A Rn×n is invertible if all its columns are linearly independent and, thus, Ax = 0 if and only if x = 0 for all x Rn.

The following theorem defines the Cholesky factorization of a symmetric definite positive matrix.

Theorem 4.2.1 If A Rn×n is symmetric positive definite, then there exists a unique lower triangular matrix L Rn×n with positive diagonal entries such that A = LLT .

The proof for this theorem can be found in the literature [70]. The factorization A = LLT is known as the Cholesky factorization, and L is usually referred to as the Cholesky factor or the Cholesky triangle of A. Alternatively, A can be decomposed into an upper triangular matrix U, such that A = UUT with U Rn×n upper triangular matrix.

Note how the Cholesky factorization is the first step towards the solution of a system of linear equations Ax = b with A symmetric positive definite. The system can be solved by first computing the Cholesky factorization A = LLT , then solving the lower triangular system Ly = b and finally solving the upper triangular system LT x = y. The factorization of the coe cient matrix involves the major part of the operations (O(n3) for the factorization compared with O(n2) for the system solutions), and thus its optimization can yield higher benefits in terms of performance gains.

The largest entries in a positive definite matrix A are on the diagonal (commonly referred to as a weighty diagonal). Thus, these matrices do not need a pivoting strategy in their factorization algorithms [70]. Systems with a positive definite coe cient matrix A constitute one of the most important cases of linear systems Ax = b.

The Cholesky factor of a symmetric positive definite matrix is unique. The Cholesky factorization is widely used in the solution of linear equation systems and least square problems. Although the Cholesky factorization can only be computed for symmetric positive definite matrices, it presents some appealing features, basically with respect to computational cost and storage requirements levels. These advantages make the Cholesky decomposition of special interest compared with other factorizations (e.g., the LU or QR factorizations).

4.2.1.Scalar algorithm for the Cholesky factorization

Consider the following partition of a symmetric definite positive coe cient matrix A, and its Cholesky factor L with lower triangular structure:

A = |

a21 |

A22 |

, L = |

l21 |

L22 |

, |

||

|

|

α11 |

|

|

|

λ11 |

0 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

77

CHAPTER 4. LAPACK-LEVEL ROUTINES ON SINGLE-GPU ARCHITECTURES

with α11 and λ11 scalars, a21 and l21 vectors of n − 1 elements, and A22 and L22 matrices of dimension (n − 1) × (n − 1) (the symbol in the expression above denotes the symmetric part of A, not referenced through the derivation process). From A = LLT , it follows that:

|

α11 |

|

|

λ11 |

0 |

|

λ11 |

lT |

|

|

|

|

|

λ2 |

|

|

a21 |

A22 |

l21 |

L22 |

0 |

L22T |

λ11l21 |

l21l21T + L22L22T |

|||||||||

|

|

|

= |

|

|

|

|

21 |

|

= |

|

11 |

|

, |

||

so that |

|

|

|

|

|

|

|

|

|

|

||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||

|

|

|

|

|

|

|

λ11 |

= |

√ |

|

, |

|

|

|

||

|

|

|

|

|

|

|

α11 |

|

|

|

||||||

|

|

|

|

|

|

|

l21 |

= |

a21/α11, |

|

|

|

||||

|

|

|

|

|

A22 − l21l21T |

= |

L22L22T . |

|

|

|

||||||

Following these expressions, it is possible to obtain a recursive formulation of a scalar algorithm to calculate the Cholesky factorization, which rewrites the elements in the lower triangular part of A with those of the Cholesky factor L:

1. Partition A = |

α11 |

|

. |

a21 |

A22 |

2.α11 := λ11 = √α11.

3.a21 := l21 = a21/λ11.

4.A22 := A22 − l21l21T .

5.Continue recursively with A = A22 in step 1.

This algorithm is usually known as the right-looking algorithm for the Cholesky factorization because, once a new element of the diagonal of the Cholesky factor (λ11) is calculated, the corresponding updates are applied exclusively to the elements below as well as to the right of the calculated element (that is, A21 and A22).

With this algorithmic formulation, it is only necessary to store the entries of the lower triangular part of the symmetric matrix A. These elements are overwritten with the resulting factor L. Thus, in the operation A22 := A22 − l21l21T , it is only necessary to update the lower triangular part of A22, with a symmetric rank-1 update. Proceeding in this manner, the flops required by this algorithm is n3/3.

The major part of the calculations in the algorithm are cast in terms of the symmetric rank-1, which performs O(n2) operations on O(n2) data. This ratio between memory accesses and e ective calculations does not allow an e cient exploitation of the underlying memory hierarchy, and thus performance is dramatically limited by the memory access speed. In order to improve performance, this BLAS-2-based algorithm can be reformulated in terms of BLAS-3 operations (mainly matrixmatrix multiplications and rank-k updates). This is the goal of the blocked algorithm presented next.

4.2.2.Blocked algorithm for the Cholesky factorization

Deriving a blocked algorithm for the Cholesky factorization requires a di erent partitioning of matrices A and L. Specifically, let

A = |

A21 |

A22 |

, L = |

L21 |

L22 |

, |

|||

|

|

A11 |

|

|

|

L11 |

0 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

78

4.2. CHOLESKY FACTORIZATION

where A11 and L11 are b × b matrices (with b, the block size, being usually much smaller than the dimension of A), and A22 and L22 matrices with (n − b) × (n − b) elements. From A = LLT , we then have that:

|

A11 |

|

|

L11 |

0 |

|

L11T |

L21T |

|

L11L11T |

|

|

A21 |

A22 |

L21 |

L22 |

0 |

L22T |

L21L11T |

L21L21T + L22L22T |

|||||

|

|

|

= |

|

|

|

|

|

= |

|

|

, |

which yields the following expressions:

L |

11 |

LT |

= |

A , |

|

11 |

|

11 |

|

|

|

L21 |

= A21L11−T , |

|

A22 − L21L21T |

= |

L22L22T . |

||

The blocked recursive algorithm for the calculation of the Cholesky factor of A, overwriting the corresponding elements of the lower triangular part of A, can be formulated as follows:

1. Partition A = |

A11 |

|

, with A11 being a b × b sub-matrix. |

A21 |

A22 |

2.A11 := L11 such that A11 = L11LT11 (that is, obtaining the Cholesky factor of A11).

3.A21 := L21 = A21L−11T .

4.A22 := A22 − L21L−21T .

5.Continue recursively with A = A22 with step 1.

This blocked algorithm is also a right-looking variant: after the factorization of the diagonal block A11, only the blocks below and to its right are updated. Data only needs to be stored in the lower triangular part of A. Thus, the factorization of the diagonal block, A11 := L11, and the update A22 := A22 − L21LT21 only a ect the lower triangular parts of the corresponding blocks. The cost of the blocked formulation of the Cholesky factorization is n3/3 flops, provided b n.

Blocked versions for the Cholesky factorization and other basic linear algebra routines are common in many software packages, such as LAPACK, and usually deliver high performance by invoking BLAS-3 kernels. In particular, in the blocked Cholesky factorization algorithm presented above, the calculation of the panel A21 and the update of the sub-matrix A22 can be cast exclusively in terms of BLAS-3 calls. In fact, the major part of the operations are carried out by the latter operation (a symmetric rank-b update); this operation requires O(n2b) operations on O(n2) data. Thus, in case b is significantly large, the blocked algorithm increases the ratio between operations and data accesses by a factor of b, and thus the data reutilization is improved. This is a critical factor to attain high performance on novel architectures, such as modern graphics processors, where blocked algorithms become absolutely necessary.

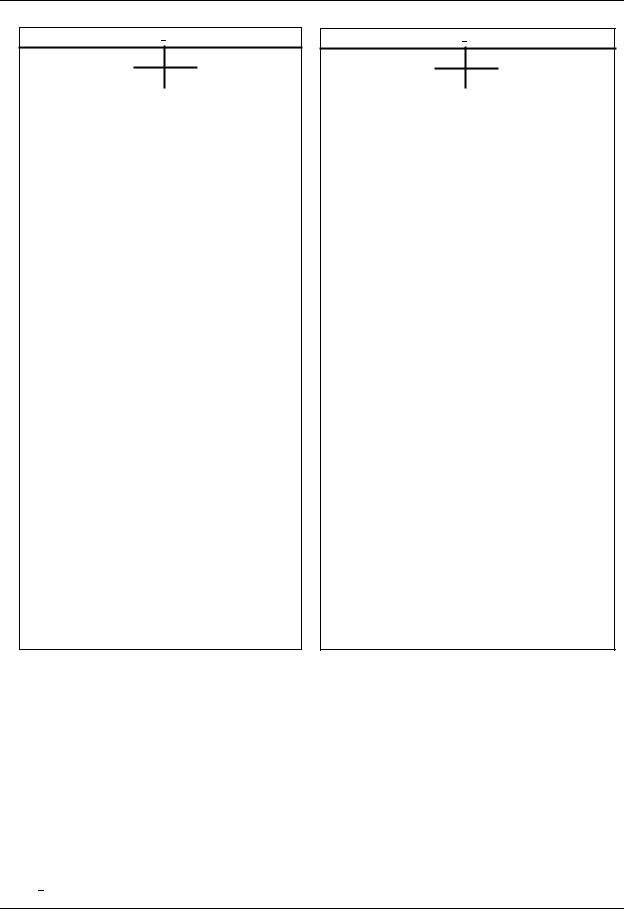

4.2.3.Algorithms in FLAME notation for the scalar and blocked Cholesky factorization

There exist di erent algorithmic variants for the Cholesky factorization. Figure 4.1 shows the three di erent algorithmic variants that can be derived for the Cholesky factorization of a symmetric

79

CHAPTER 4. LAPACK-LEVEL ROUTINES ON SINGLE-GPU ARCHITECTURES

Algorithm: A := CHOL UNB(A)

!

AT L AT R

Partition A →

ABL ABR

where AT L is 0 × 0 while m(AT L) < m(A) do

Repartition

|

AT L AT R |

|

|

|

|

|

|

T |

|

|

01 |

|

T |

|

||||||||||

|

|

|

|

|

|

|

|

|

! |

→ |

|

A00 |

|

a |

|

|

A02 |

|

||||||

|

|

|

|

|

|

|

|

|

|

|

|

|||||||||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|||||||||

|

|

|

|

|

|

|

|

|

|

|

a10 |

|

α11 |

a12 |

|

|||||||||

|

ABL |

|

ABR |

|

|

|

||||||||||||||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

A20 |

|

a21 |

A22 |

|

||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

where α |

|

is 1 |

|

1 |

|

|

|

|

|

|

|

|

|

|||||||||||

|

|

|

|

|

|

|

|

|

|

|||||||||||||||

|

|

|

11 |

|

|

|

|

× |

|

|

|

|

|

|

|

|

|

|

||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||||

Variant 1: |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|||||||

α11 := √ |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||||||||

α11 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|||||||||||

|

a21 := a21/α11 |

|

|

|

|

|

|

|

|

|

|

|

|

|||||||||||

A22 |

:= A22 − a21a21T |

|

|

|

|

|

|

|

|

|||||||||||||||

Variant 2: |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|||||||

α11 |

:= α11 |

|

|

|

a10a10T |

|

|

|

|

|

|

|

||||||||||||

a10T |

:= a10T |

TRIL |

|

A00−T |

|

|

|

|

|

|

|

|

||||||||||||

|

|

:= √ |

|

|

|

|

− |

|

|

|

|

|

|

|

|

|

|

|

|

|

||||

α11 |

|

α11 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||||||||

Variant 3: |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|||||||

α11 |

:= α11 − a10a10T |

|

|

|

|

|

|

|

|

|||||||||||||||

α11 |

:= √ |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||||||||

α11 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|||||||||||

a21 |

:= a21 − A20a10T |

|

|

|

|

|

|

|

|

|||||||||||||||

a21 |

:= a21/α11 |

|

|

|

|

|

|

|

|

|

|

|

|

|||||||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|||||||||||

Continue with |

|

|

|

|

|

|

|

|

|

|

|

|

|

|||||||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

a10T |

|

α11 |

|

a12T |

|

|||||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|||||||||

|

ABL |

|

ABR ! |

|

|

|

A00 |

|

a01 |

|

A02 |

|

||||||||||||

|

|

|

|

|

|

|||||||||||||||||||

|

|

|

|

|

|

|

|

|||||||||||||||||

|

|

← A20 |

|

a21 |

|

A22 |

||||||||||||||||||

|

AT L |

|

AT R |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|||||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

endwhile

Algorithm: A := CHOL BLK(A)

!

AT L AT R

Partition A →

ABL ABR

where AT L is 0 × 0 while m(AT L) < m(A) do Determine block size b

Repartition

|

|

! → |

|

A00 |

|

A01 |

A02 |

|

|||||||

|

AT L |

AT R |

|||||||||||||

|

|

|

|

|

|

|

|

||||||||

|

|

|

|

A10 |

|

A11 |

A12 |

|

|||||||

|

ABL |

ABR |

|

|

|||||||||||

|

|

|

|

|

|

|

A20 |

|

A21 |

A22 |

|

||||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

where A |

11 |

is b |

b |

|

|

|

|

|

|

|

|||||

|

|

|

|

|

|

||||||||||

|

|

|

|

|

× |

|

|

|

|

|

|

|

|

||

|

|

|

|

|

|

|

|

|

|

|

|

|

|||

Variant 1: |

|

|

|

|

|

|

|

|

|

|

|

|

|||

A11 |

:= {L\A}11 = CHOL |

|

UNB(A11) |

||||||||||||

|

|||||||||||||||

A21 := A21TRIL (A11)−T |

|

(TRSM) |

|||||||||||||

A22 |

:= A22 − A21A21T |

|

|

|

|

|

|

(SYRK) |

|||||||

Variant 2: |

|

|

|

|

|

|

|

|

|

|

|

|

|||

A10 := A10TRIL (A00)−T |

|

(TRSM) |

|||||||||||||

A11 |

:= A11 − A10A10T |

|

|

|

|

|

|

(SYRK) |

|||||||

A11 |

:= {L\A}11 = CHOL |

|

UNB(A11) |

||||||||||||

|

|||||||||||||||

Variant 3: |

|

|

|

|

|

|

|

|

|

|

|

|

|||

A11 |

:= A11 − A10A10T |

|

|

|

|

|

|

(SYRK) |

|||||||

A11 |

:= {L\A}11 = CHOL |

|

UNB(A11) |

||||||||||||

|

|||||||||||||||

A21 |

:= A21 − A20A10T |

|

|

|

|

|

|

(GEMM) |

|||||||

A21 := A21TRIL (A11)−T |

|

(TRSM) |

|||||||||||||

|

|

|

|

|

|

|

|

|

|

||||||

Continue with |

|

|

|

|

|

|

|

|

|

||||||

|

AT L AT R |

|

|

A10 |

|

A11 |

|

A12 |

|||||||

|

|

|

|

|

|||||||||||

|

|

|

|

|

! ← |

|

A00 |

|

A01 |

|

A02 |

|

|||

|

|

|

|

|

|

|

|

||||||||

|

|

|

|

|

|

|

|

|

|

||||||

|

|

|

|

|

A20 |

|

A21 |

|

A22 |

|

|||||

|

ABL |

ABR |

|

|

|||||||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

endwhile

Figure 4.1: Algorithmic variants for the scalar (left) and blocked (right) algorithms for the Cholesky factorization.

positive definite matrix A; scalar variants are shown on the left of the figure, and blocked variants are shown on the right.

These variants have been obtained applying the FLAME methodology for the formal derivation of algorithms [138]. The lower triangular part of A is overwritten by the Cholesky factor L, without referencing the upper triangular part of A. In the blocked variants, the expression A := {L\A} = CHOL UNB(A) indicates that the lower triangle of block A is overwritten with its Cholesky factor L,

80