Dr.Dobb's journal.2005.12

.PDF

•E-mail, where recipients are defined in the application configuration of the service.

•Log database, a central repository to capture important severities from all applications for reporting and analytics.

•Windows application events, to act as a gateway to other monitoring or auditing tools if applicable.

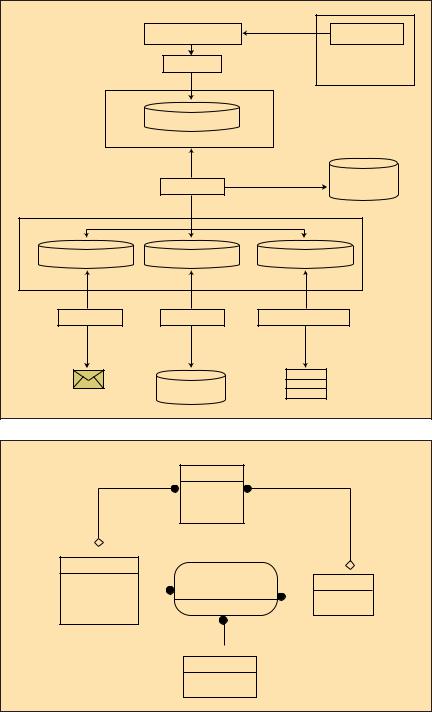

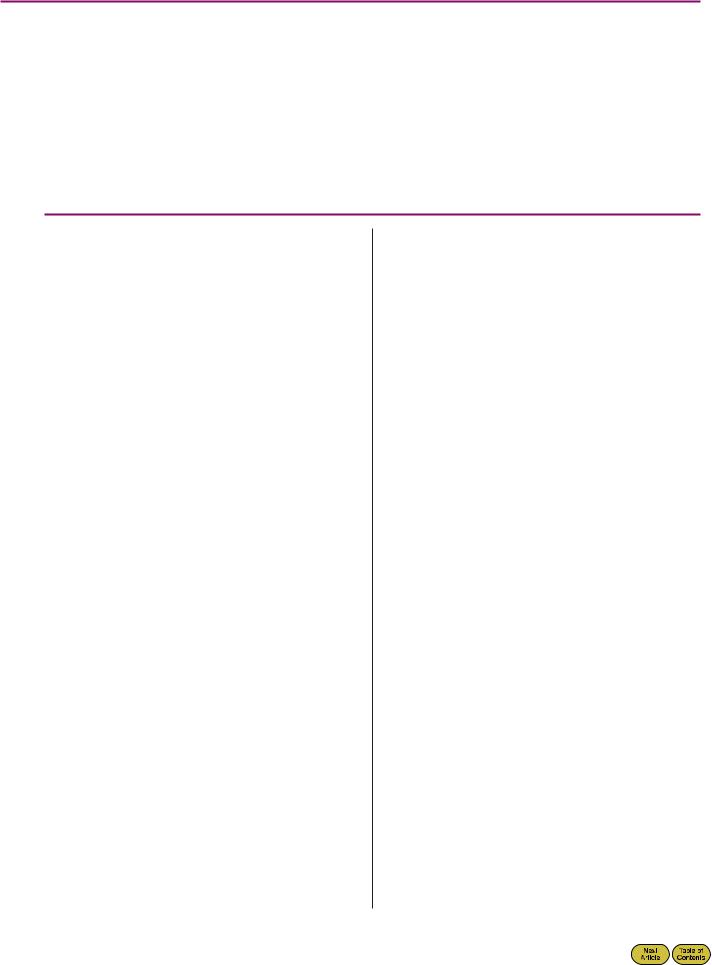

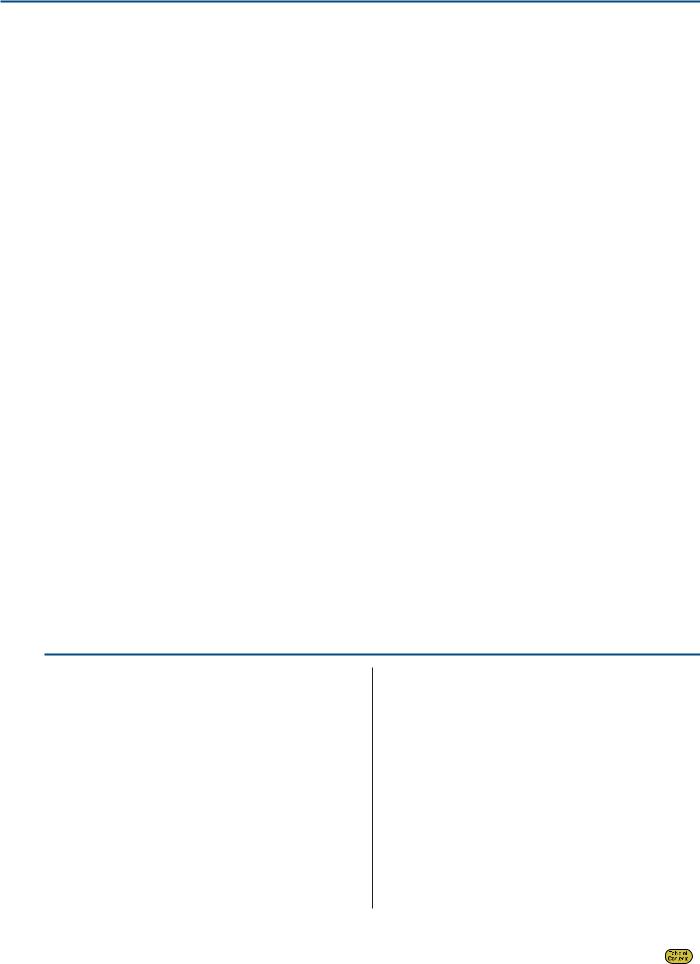

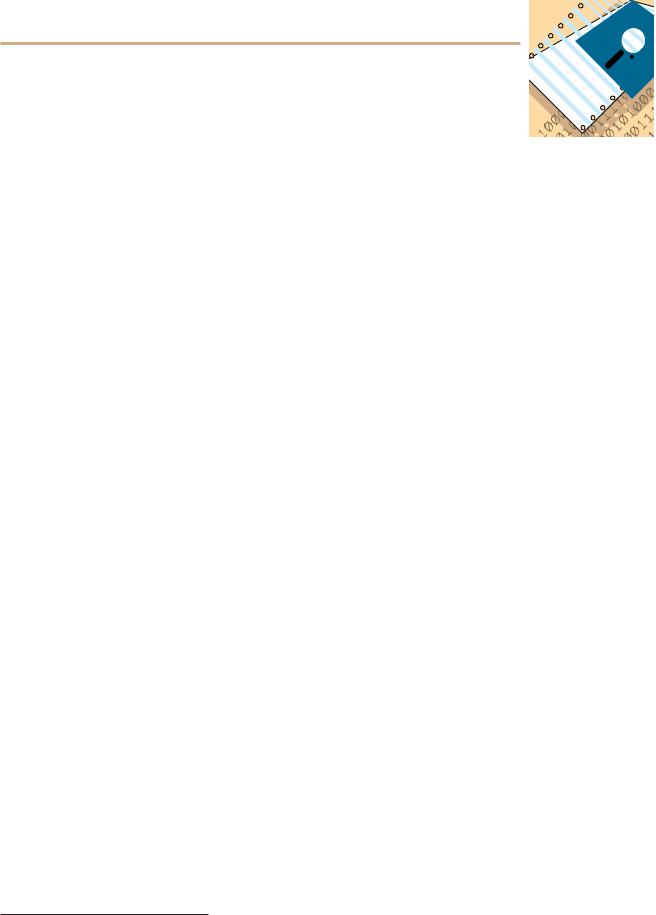

Figure 2 defines the metadatabase used for configuring individual applications to run in the log service. This is a straightforward design in which the schema consists of the core entities for configuring each application. The Application table stores information about each registered application, including e-mail information for that type of destination, and current logging levels. Severity defines the five supported levels, from INFO to FATAL. Destination defines each supported destination type and binds that to a Service Broker service. Putting it all together is the

Central_Log_Configuration table that defines what each application’s severity-to- destination mappings are.

The Service Broker

SQL Server 2005 includes a reliable messaging framework within its database engine called “Service Broker.” It has most of the common queuing functionality you would expect from messaging middleware, including support for transactions, message ordering, and the ability to scale and provide service programs, which consume messages and run multiple instances of themselves based on the load on the queue. Comparisons can be made between this and the Java Messaging Service, with those service programs acting like MessageDriven Beans (MDBs).

When opening the SQL Server Management Studio, you notice some sections in each user database; namely, a section called “Service Broker” that has some of these object types:

•Message Type. Defines the name and type of a particular message that is used by a service.

•Contract. Defines which message types to use and what the associated end points are between two services.

•Service. Defines a set of related tasks based on a particular queue and contract, and links a conversation to a queue.

•Queue. The persistent storage for messages. An internal table type.

Another important part of the broker is how it communicates. “Conversations” are the means by which messages are sent to a queue (by way of a service) and how responses are sent back to the originator

(in the case of dialogues). For this logging system, you don’t need to worry about true dialogues, only about sending/receiving messages. Although this still must be done through conversations, the implementation is simplified.

Publishing to the Central Log Queue

Assuming the database is already created, the first main Service Broker component to build is the Queue. Before executing a one-line SQL statement that does this,

however, you need to define what the XML log message that gets queued looks like; see Example 1. Application_Name and Severity_Cd must exist in their respective tables, and the application must have a destination configured for this particular severity. Now you can setup the appropriate plumbing to move this log message into a queue (including the queue object itself); see Example 2.

The WELL_FORMED_XML message type validation ensures that nonXML

|

|

Log4NET Log Publisher |

|

ADONETAppender |

|

|

|

|

Log Publisher |

|

|

|

|

|

Publish Message |

|

Log4NET Framework |

|

|

|

|

|

|

|

Queue |

Central Log Queue |

|

|

|

|

Main |

|

|

||

|

|

|

|

|

|

|

|

|

Consume Message |

|

|

|

|

|

App Config |

Configuration |

|

|

|

|

Message Router |

|

Database |

|

|

|

Route Message To Queues |

||

Destinations |

E-mail Queue |

|

Log DB Queue |

Windows Event Queue |

|

|

|

|

|

|

|

|

Consume Message |

Consume Message |

Consume Message |

||

|

E-mail Handler |

|

Log DB Handler |

Windows Event Handler |

|

|

Send Message |

|

Insert Message |

|

Post Message |

|

To Recipients |

|

To Central Log |

|

To Windows |

|

|

|

Repository |

|

Event Log |

|

|

|

|

|

Event msg |

|

|

|

Log Database |

|

Event msg |

|

|

|

|

Event msg |

|

Figure 1: Proposed logging service.

|

|

|

Central_Log |

|

|

|

|

|

|

Central_Log_Id |

|

|

|

|

|

|

Message_Date |

|

|

|

|

P |

Message |

P |

|||

|

|

|

Application_Id |

|

|

|

|

|

|

Severity_Id |

|

|

|

Application |

Central_Log_Configuration |

|||||

|

|

|

||||

Application_Id |

Application_Id |

|

|

Severity |

||

|

|

|

|

|

||

Application_Name |

|

|

|

|||

Severity_Id |

|

|

Severity_Id |

|||

Application_Desc |

|

|

|

|

||

|

|

Destination_Id |

|

|

Severity_Cd |

|

Logging_Level |

|

|

||||

Config_Desc |

|

|

||||

|

|

|||||

Email_Recipients |

|

|

Severity_Desc |

|||

Email_Admin_URL |

|

|

|

|

||

Destination

Destination_Id

Destination_Name

Service_Name

Figure 2: The metadatabase.

http://www.ddj.com |

Dr. Dobb’s Journal, December 2005 |

69 |

code doesn’t get into the queue. There are also other choices, including one that defines a particular schema to validate against. Creating a contract for the system is simplified because this is a one-way message only — no response is needed. Instead of SENT BY ANY, you specify a message type for sending by INITIATOR, and another message type for sending to TARGET. Finally, the creation of the service binds everything together.

How do you get a message published? This is done by the Log Publisher stored procedure in Listing One. Sending an XML message isn’t that much work. First, a dialog started against the LogService defines which queue the message goes into and which contract is used for the conversation. You still need to define a from/to service, but because this isn’t a true dialog, they can be the same. A unique message handle is received from this statement. That handle is then used to send the message.

Log messages sit in the queue until they are consumed by a service program, which attaches itself to the queue and receives the messages for processing. In this case, the processing is simply publishing to another queue.

Routing Messages

To Destination Queues

Once messages are in the Central Log Queue, they are received by a program that— based on the application and severity— routes that message down to a destination queue. To do this, you’ll notice new Service Broker syntax in SQL. A basic SQL statement to receive (remove) a message off a queue looks like this:

RECEIVE cast(message_body as nvarchar(max)) FROM [CentralLogQueue]

If you want to block on receive until a message arrives, then you can wrap the aforementioned code in a WAITFOR statement and define a time interval to fall through:

WAITFOR

(

RECEIVE cast(message_body as nvarchar(max)) FROM [CentralLogQueue]

), TIMEOUT 5000

Armed with these basic statements to retrieve messages, you can now build the most complex part of the system — the Router stored procedure in Listing Two. The core algorithm is:

1.Get a message off the queue.

2.Look up the application’s configuration and determine what destinations to publish the message to based on the severity of the message.

3.For each destination (service), create a dialog and send the message.

Once the message arrives, OpenXML( ) returns the application and severity. From this, you can query the configuration tables to return the destination and service names you need to publish to. On each destination, you dynamically bind the service name to the begin dialog, and using the same LogServiceContract used for the original Publisher procedure, you send the exact same LogRequest message you received to the destination queues defined by the service name. Note the use of LogServiceGUID. At the beginning of this procedure, you cache this value. If you were hosting the same service (same name) on multiple databases, you would need to choose which GUID to use.

Once you have the router that will keep listening for messages, it’s invocation time. You can run the thing via command line

<ROOT>

<CentralLogMessage> <Application_Name>APP_TEST</Application_Name> <Severity_Cd>INFO</Severity_Cd> <MessageDateTime>1/1/2005 12:12:12</MessageDateTime>

<Message>This is an INFORMATIONAL TEST message!</Message> </CentralLogMessage>

</ROOT>

Example 1: Defining an XML log message.

CREATE MESSAGE TYPE [LogRequest] VALIDATION = WELL_FORMED_XML

CREATE CONTRACT [LogServiceContract] AUTHORIZATION [dbo] ([LogRequest] SENT BY ANY)

CREATE QUEUE [dbo].[CentralLogQueue] CREATE SERVICE [AppEventLogService]

AUTHORIZATION [dbo]

ON QUEUE [AppEventDestinationQueue] ([LogServiceContract])

Example 2: Moving a log message into a queue.

to test it, but there’s a better way to do this in production — let the queue manage it:

ALTER QUEUE [CentralLogQueue] WITH

ACTIVATION ( STATUS =

ON, PROCEDURE_NAME =

[SPRouter], MAX_QUEUE_READERS =

10 , EXECUTE AS N'dbo' )

The queue will automatically activate SPRouter once a message is published. As the load on the queue increases, more instances of the service program are invoked up to the max readers (in this case, 10). Once these programs are running, however, they cannot be shut off unless the queue is disabled. If the load on the queue decreases, altering the activation status to OFF keeps new instances of the program from running (if max isn’t hit), but will not shut down live ones. This functionality exists to keep the broker from killing processes in midstream (because it probably wouldn’t know if the program is waiting for a message or churning through one). If the broker’s event model was easily exposed and delegation was used to invoke the service program, you would not need to continually return to the waitfor. This would let the engine adjust the instances downward without concern over where the process is.

Destination Service Programs

Using the main algorithm from the Router, you can cut out the middle part (sending to another queue), replacing it with the specific code to send e-mail, insert into the Central Log Database, and post to the window’s event log. Listings Three, Four, and Five (all available electronically) accomplish these tasks, respectively, although you may have your own methods for e-mail and event logging.

Testing the Service Using Log4NET

Apache’s Log4NET (http://logging.apache

.org/log4net/) is an open-source logging framework based on the Log4j project. The main goal of the framework is to allow easy inclusion of logging into applications, with the ability to define many destinations for outputting the logs, and filtering them based on severity. Within five minutes, you can introduce minimally a console-based logging mechanism into your .NET application. With a little more effort, you can extend that to such destinations as rolling files, SMTP, remoting, Window’s event logs, and databases, which is where the integration point with our Central Logging System occurs.

Log4NET uses appenders to define destination paths to send log messages to. One such appender is ADONetAppender, which allows the writing of logs

70 |

Dr. Dobb’s Journal, December 2005 |

http://www.ddj.com |

to a database either through defined SQL or stored procedures. Say you want any severities of Warning, Error, or Fatal to be sent to our Enterprise Logging Service (and keep the verbose logging of INFO and DEBUG on the client side only). What is needed is to define in the application’s configuration file an instance of the ADONetAppender that maps to the Log Publisher stored procedure. This creates that bridge into the Central Log Queue.

Because the Log Publisher expects an XML Log Message, you need a translation

of the Log4NET’s log class prior to calling the procedure. To ensure all the messages are translated the same from any number of Log4NET implementations, you do this in a Log4NETLogPublisher stored procedure; see Listing Six (available electronically). The ADONetAppender configuration that defines the necessary plumbing needed to pass the log fields into the stored procedure is defined below that. The @ApplicationName is passed in here, as well as the level being set to WARN. In the Central Logging configuration, you need to have at least one destination

mapped to WARN, ERROR, and FATAL for this application.

Conclusion

For organizations looking to upgrade to SQL Server 2005 and do more in respect to enterprise-application logging and exception handling, this project could act as a conduit for exploring the feature set of Microsoft’s new Service Broker to produce a trimmed-down but functional and scalable logging service.

DDJ

Listing One

--This procedure will receive an XML log record and submit to the Central

--Queue via the Log Service.

CREATE PROCEDURE [dbo].[SPLogPublisher] @msgXML [nvarchar](max)

WITH EXECUTE AS CALLER AS

declare @msgHandle uniqueidentifier

begin transaction

begin dialog conversation @msgHandle

from service [LogService] to service 'LogService' on contract [LogServiceContract];

send on conversation @msgHandle message type [LogRequest] (@msgXML) end conversation @msgHandle

commit

Listing Two

CREATE PROCEDURE [dbo].[SPRouter]

WITH EXECUTE AS CALLER

AS

--This is a Service Program that will receive Log Messages as xml from the

--Central Queue

--(LogService) and route them depending on the Log_Configuration metadata to

--the appropriate

--Destination Queues (App, DB and/or Email Services).

--

--Workflow:

--a) receive an xml log message from the CentralLogQueue

--b) for each destination_id that is setup in the Log Configuration for the

--Application_Name and Severity_Cd tags in the message log, post the

--xml log message to that destination queue.

-- |

|

|

declare @conversation_handle |

uniqueidentifier |

|

declare @message_body |

|

nvarchar(MAX) |

declare @message_type_name |

sysname |

|

declare @xml_doc_handle |

int; -- used by XPath to create a doc in memory |

|

declare @xml_doc |

varchar(MAX); -- receives xml doc from the sp_preparedoc call |

|

--database ID for these services (assume they all exist in same database) declare @LogServiceGUID uniqueidentifier;

--store the results of this xml log into a temp table for querying to find

--destinations

declare @xml_log_app_and_severity table ( application_name varchar(32), severity_cd varchar(32) )

-- will store each destination and service that this log is going to goto declare @destinations table ( destination_name varchar(32),

service_name varchar(32) )

--used for working on individual rows from the destinations table above declare @destination varchar(32)

declare @service varchar(32) declare @msgHandle uniqueidentifier

--cache this for use when publishing the messages to destination queues SELECT @LogServiceGUID = service_broker_guid

FROM sys.databases

WHERE database_id = DB_ID('LogService');

--Keep this alive. Else the broker will call this procedure for every message. while ( 1=1 )

begin

-- receive the next message off the queue waitfor

(

receive top(1) @message_body=message_body,

@message_type_name=message_type_name, @conversation_handle=conversation_handle

from [CentralLogQueue]

)

-- insure the message is of the proper type (should ALWAYS be) if @message_type_name = 'LogRequest'

begin

-- setup an internal representation of this XML doc

exec sp_xml_preparedocument @xml_doc_handle output, @message_body

--get the destination names based on application and severity of this log

--and load into temp destination table insert into @xml_log_app_and_severity select application_name, severity_cd

from OpenXML(@xml_doc_handle, '/ROOT/CentralLogMessage',2)

with (Application_Name varchar(64), Severity_Cd varchar(32))

-- lookup the destination(s) via the configuration insert into @destinations

select d.destination_name, d.service_name

from destination d, central_log_configuration clc, application a,

severity s, @xml_log_app_and_severity x

where x.application_name = a.application_name and x.severity_cd = s.severity_cd and clc.application_id = a.application_id and clc.severity_id = s.severity_id and clc.destination_id = d.destination_id and a.logging_level >= s.severity_id

-- publish the log to each destination by iterating through the table declare cDestinations cursor fast_forward for select * from @destinations

open cDestinations

fetch next from cDestinations into @destination, @service

while @@fetch_status = 0 begin

--publish message to designated service

--@destination name is only needed for debugging purposes begin transaction

begin dialog conversation @msgHandle from service @service

to service @service, @LogServiceGUID on contract [LogServiceContract];

send on conversation @msgHandle message type [LogRequest] (@message_body)

end conversation @msgHandle commit transaction

--get the next row (service) to publish this message too fetch next from cDestinations into @destination, @service

end

close cDestinations deallocate cDestinations

-- clean up temp tables

delete from @xml_log_app_and_severity delete from @destinations

-- clean up

exec sp_xml_removedocument @xml_doc_handle

end else begin

-- wrong type of message, or an error occurred.

print N'Central Logging: Invalid message type received for DIALOG HANDLE: ' + cast( @conversation_handle as varchar(256) ) + N' XML MESSAGE: ' +

cast( cast( @message_body as XML ) as varchar(MAX));

end

end -- loop

DDJ

http://www.ddj.com |

Dr. Dobb’s Journal, December 2005 |

71 |

E M B E D D E D S Y S T E M S

Memory Management &

Embedded Databases

Building an infrastructure with optimization in mind

ANDREI GORINE

AND KONSTANTIN KNIZHNIK

Embedded databases in general, and in-memory databases in particular, are especially dependent on the quality of their memory-management algo-

rithms. Designed for use in resourceconstrained embedded systems, the features, performance, and predictability of these databases depend heavily on the efficiency of algorithms for allocating precious memory. The performance cost of general-purpose allocators, such as the Windows C runtime or glibc allocator, is prohibitive for many embedded applications, and the memory overhead is often excessive. As a result, many embedded applications, including database management systems, implement custom memory managers for optimization. (In this article, we use the term “application” to refer to various programming tasks. The application, for example, can be a filesystem or operating-system kernel.) A single database system often utilizes numerous allocation algorithms to address specific internal tasks such as infrastructure for data layout, heap management, and SQL parsers and optimizers.

Generally speaking, allocators keep track of which parts of memory are used and which are free. The design goal of any allocator is to minimize wasted memory space, balancing the amount of wasted space against the processing time required to recover it. A major target of allocators is to limit or mitigate the fragmentation that occurs when applications free memory blocks in any order. De-

Andrei is principal architect of McObject. Konstantin is a software engineer with Borland. They can be reached at gor@ mcobject.com and konstantin.knizhnik@ borland.com, respectively.

fragmentation strategies employed by general-purpose allocators impose CPU overhead that is often prohibitive for embedded systems, while custom memory managers can offer lightweight defragmentation more suited to embedded applications’ resource constraints and required short response times.

In this article, we examine generalpurpose and custom memory-allocation strategies, illustrating their usage and advantages/disadvantages generally as well as their applicability for particular database management programming tasks. Many of the concepts derive from our experience creating the eXtremeDB in-memory database and its eXtremeSQL extension (http://www.mcobject.com/).

List Allocators

List allocators are perhaps the most widely used and well-known allocator algorithms, and often form the foundation for general-purpose memory managers that handle unpredictable allocation patterns. This type of algorithm organizes a pool of contiguous memory locations (often called “free holes”) into a singly linked list. The allocator services a request for memory allocation by traversing the list looking for a large enough hole. Several strategies are possible when searching for an area that satisfies the request for memory allocation of a given size. These include first-fit searches, in which the allocator walks a linked-list to find the first available memory hole; next-fit, which begins searching where a previous search left off, rather than from the beginning of the list; and quick-fit, in which the allocator uses its own list of common memory sizes to quickly allocate a block of memory that is large enough (but perhaps larger than needed).

Almost all list allocator implementations suffer from a fragmentation problem. If an application intensively allocates and frees objects of different sizes and different lifetimes, then, in time, the list will only contain a large number of small holes. To battle fragmentation, list algorithms usually implement techniques that merge small holes together, or that sort the list by hole size, enabling the first fit algorithm to quickly locate a free hole that best matches the allocation request size. But efficient

defragmentation techniques come at the price of extra per-object overhead. Defragmentation’s performance and per-object memory overhead must be balanced against any gains in efficient memory use. Often the more task-specific allocators we describe here can provide greater efficiency than generic list allocators.

“List allocators are perhaps the most widely used and well-known allocator algorithms”

Block Allocators

List-based allocators’ per-object overhead can be prohibitive in resource-constrained embedded environments. Many applications tend to allocate a large number of small objects of the same size. Typical examples of such allocations include small scalar values such as date/time, and abstract syntax tree nodes used by various parsers. Block allocators handle such objects very efficiently with minimal overhead, and eliminate fragmentation by design.

The idea of a block allocator is straightforward. A block allocator is given a quantity of memory (we’ll call this the “large block”), divides it into equal-size pieces, and organizes them in a linked-list of free elements. To serve a request for memory, the allocator returns a pointer to one piece and removes it from the list. When there are no more elements in the “free list,” a new large block is selected from the memory pool using some other allocator (such as a list allocator). The new large block gets divided by the block allocator, elements are put into a new linked-list, and allocation requests are handled from this newly created list. When an object is freed, it is placed back into its original “free list.” Because all allocated objects in a given list are of the same size, there is no need for the block allocator to “remember” each element’s size, or to locate neighboring

72 |

Dr. Dobb’s Journal, December 2005 |

http://www.ddj.com |

chunks to merge them. Listing One implements a simple block allocator.

In other cases, more complex implementations of the block allocator algorithm are justified to improve memory-management efficiency. Often, application processing is divided into multiple stages. Objects allocated at each of these stages are not necessarily needed during subsequent stages. The block allocator used during each particular stage can be designed to return the unused blocks back to the memory pool.

For example, a compiler includes three distinct processing phases:

•Parsing, in which the compiler builds the abstract syntax tree (AST).

•Analyzing language statements and mapping them to an internal representation.

•Generating machine code.

The block allocator is well suited to manage the compiler’s allocations, which contain a large number of similar objects, such as AST nodes (parsing results). With the simplest block allocator, however, the compiler would not be able to reuse the space allocated for the AST nodes, even though they are not needed during the subsequent stages. However, a slightly more sophisticated implementation would free the AST at the end of the first phase, allowing the compiler to reuse the memory in the second and third phases.

The most basic block allocators satisfy allocation requests only for objects that fit into their predetermined element size, making such algorithms useful only when the allocation pattern is known in advance (for example, when the application always allocates 16-byte objects). In practice, many memory managers, including database memory managers, need to satisfy requests of several allocation patterns. To utilize the simplicity advantage of the block allocator algorithm while meeting the application’s need to allocate memory in variously sized chunks, a block allocator is often combined with some other technique into a hybrid memory manager. For example, the block allocator can maintain multiple lists of different-sized elements, choosing the list that is suited for a particular allocation request. Meanwhile, the blocks themselves and other large objects — those that ex-

ceed the chunk size of any of the blocks— are allocated using another generalpurpose allocator (for example, a page allocator or a list allocator). In such an implementation, the number of allocations (objects) processed by the block algorithm is typically orders of magnitude times higher than those made by the general-purpose malloc( ), resulting in startling performance improvements compared to using malloc( ) on its own.

In such a hybrid memory manager, the large block size depends on the typical allocation request and can be chosen by the application. To better illustrate the algorithm’s functioning and demonstrate one practical approach, assume the block size is 512 bytes. Also assume the minimum size ever desired for allocation is 16 bytes and that objects are aligned on 8-byte boundaries. This alignment assumption is reasonable since on many hardware architectures the data alignment is an absolute requirement, and many others perform aligned data access much faster.

Objects that are larger than one-half of the block size are allocated by a generalpurpose allocator. For objects smaller than one-half of the block size, the memory manager uses one of the block allocator chains. But how many blocks should the memory manager maintain, and what are the optimal sizes of the elements within these blocks?

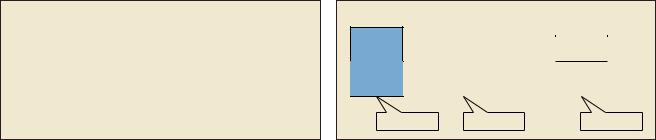

The maximum size of the object allocated by the block allocator is 256 and there can be exactly two such objects allocated from the block. Let’s call this chain a “256 chain.” To be able to allocate three objects out of a block, the element size would be 168 — let’s call it a “168 chain.” There is no sense in creating separate chains for 250 or 160 sizes, since they would require the same number of blocks as our 256 and 168 chains, respectively. If the process is continued further, it creates 13 chains with 512/4, 512/5,…512/16 chunk sizes (see Figure 1).

The two tables in Example 1 describe the data layout in Figure 1. The first array specifies the block and the second specifies the sizes of the block elements. Note that since we assumed 8-byte alignment, it’s possible to divide the aligned object size by 8 and reduce the dimension of the arrays to 32 instead of 256.

Listing Two implements the allocate( ) and free( ) methods. Note that the allocator keeps the size of the object in a hidden header field within the object. This information allows the allocator to free the object by passing only the object pointer to the free( ) method.

It is possible (although not necessarily practical) to avoid the extra object header overhead by requiring the blocks to be aligned on the block size (512 bytes in our example). It would also require reserving some space for each block within the block itself (block_header below) that would contain the block size. The size of the element would then be calculated like this:

void free(object* obj){

size_t size = ((block_header*) ((size_t)obj & ~(block_size-1))->size;

...

}

This would also reduce the maximum size of the object that could be allocated from a page — it would only be possible to keep two 248-byte objects.

Stack-Based Allocators

Memory allocators would be much simpler to design and exhibit higher performance if they only had to allocate objects and not free them. Given a block of memory, such an allocator would just advance a pointer, verifying there is enough space left in the block to satisfy an allocation request. Moreover, such an allocator would impose no memory overhead. The downside of this policy is obvious — if the memory manager is not guarding, the application could run out of memory. However, some embedded applications benefit from incorporating custom allocators that allocate objects but never release them. This approach is justified when the number of allocations and/or the total amount of required memory is known in advance and limited.

A slight variation on this algorithm is particularly useful when memory requirements can be divided into a first phase in which objects are allocated, and a second in which they are deallocated. In this case, the stack pointer can simply be rewound, effectively deallocating the objects. This allocation pattern is typical for a SQL engine, which needs to free the

const int storage::block_chain[32] = { |

|

|

|

Block_0 |

||||||||||||

0, |

0, |

|

1, |

2, |

3, |

4, |

|

5, |

|

6, |

7, |

8, |

|

|

||

9, |

9, |

10, |

10, |

10, |

10, |

11, |

11, |

11, |

11, |

|

|

|||||

11, |

12, |

12, |

12, |

12, |

12, |

12, |

12, |

12, |

12, |

|

|

|||||

12, 12 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

}; |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

const int storage::block_size[32] = { |

|

|

|

|

||||||||||||

16, |

16, |

24, |

|

32, |

40, |

48, |

56, |

|

64, |

72, |

80, |

|||||

96, |

96, |

128, |

128, |

128, |

128, |

168, |

168, |

168, |

168, |

|||||||

168, |

256, |

256, |

256, |

256, |

|

256, |

256, |

256, |

256, |

256, |

||||||

256, |

256 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

2x256 Chunks |

}; |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

Block 1 |

|

Block 13 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

3x168 Chunks |

32x16 Chunks |

Example 1: Description of the data layout in Figure 1. |

Figure 1: Data layout. |

http://www.ddj.com |

Dr. Dobb’s Journal, December 2005 |

73 |

memory allocated while parsing a SQL statement, but only deallocates once the statement is processed.

A memory manager built on this twophase strategy can be highly efficient, yet allows reuse of the memory pool. It maintains a pointer to the current position in a memory block to allocate objects within the block. To allocate an object, the pointer is incremented by the requested size, and the old value of the pointer is returned to reference the allocated memory. When there is no more space available in the current block, a new block is allocated out of the application’s memory pool. This is done by some generalpurpose allocator such as the standard malloc/free. Blocks used in this process are kept in a linked-list.

The deallocation part of the algorithm is also quite trivial to implement, and fast in execution: All blocks are simply released using the general-purpose deallocator (for instance, free( )).

The simple stack allocator in Listing Three (available electronically; see “Resource Center,” page 4) implements the two-phase strategy previously described. It starts with a fixed amount (block) of memory. The allocator maintains and the application marks (remembers) the current stack position pointer that identifies a segment on the stack associated with the processing. When the application completes the processing and no longer needs the objects, it releases (frees) the stack segment, causing the allocator to reset the stack pointer back to the mark. While the current process is still active, the application may initiate another operation that also uses the allocator. When this starts, the allocator marks the current stack position that identifies a new segment for use. The stack segments never overlap: The application never allocates more objects from the first segment until the second segment is removed from the stack (see Figure 2). The deallocation of segments is done in LIFO order; the segments marked last must be deallocated first.

The allocation/deallocation pattern just described may sound impractical for many applications, due to the rather narrow range of tasks that it can serve. However, in the context of a relational database engine, this pattern is typical. For example, when evaluating a condition, the temporary results of subexpression evaluations need only be kept until the condition evaluation is com-

plete. The stack can be marked before starting to evaluate a condition, and reset to the mark after results are returned.

Stack allocators are superior when an application, as a natural byproduct of its operation, keeps track of the order in which objects are allocated. For some applications, such as those that perform recursive processing, a stack-based allocator simplifies design and improves performance. A database engine is a vivid example, since many of its algorithms use recursion. If the engine uses an allocator that requires explicit deallocation of individual objects, it must track each object’s lifespan, as well as references to the object by other objects. Failure to do this results in memory leaks and infamously “wild” dangling pointers. In contrast, setting a mark on the stack before processing a statement, and rewinding to the mark after processing, is as simple and fast as it gets.

When the stack allocator is used, a SQL engine controls exactly how objects are allocated. Therefore, it is able to enforce the LIFO order of allocation/deallocation procedures. However, when it is necessary to use objects created inside the SQL engine outside its scope, it is not always possible to preserve the LIFO order. For example, in a SELECT statement, an application executes a query and starts iterating over the result set allocated by the engine via its stack allocator. While processing the result set, the application can execute another query, then close the first result set and start processing the second one. Two result sets overlap on the stack and the database is not capable of maintaining the LIFO order for allocation/deallocation procedures.

To handle this scenario, the allocator algorithm can be extended by keeping an identifier (an integer number) of the stack segment that is currently being used (the second result set segment in our example). The allocator also maintains a count of the stack segments. When the application attempts to free one of the segments, the allocator compares the identifier of the current segment with the one being deallocated. If the identifiers are equal, the allocator resets the stack. Otherwise, the counter is decremented and the reset is postponed until the count is zero. In our example, the stack is only reset when both result sets are closed. Again, Listing Three illustrates the allocator.

Stack-based allocators are fast and impose very little overhead. For data management, the stack model can be used to allocate short-lived objects that can be released all at once — during SQL statement execution — for example, when all memory is released upon the statement commit or rollback. A useful byproduct of this approach is improved safety: With a stackbased allocator, it is impossible to accidentally introduce a memory leak through improper deallocation because the application need not track individual allocations.

Thread-Local Allocators

Memory allocation performance in multithreaded environments is a challenge for any application. In particular, multithreaded applications running on multiprocessor systems can bog down when performing many allocations, with lock contention in the default allocator causing the bottleneck.

To synchronize access to its internals, the C runtime allocator (malloc( )/free( ))

uses a mutex that is signaled every time the allocator is used. By itself, the mutex is not all that expensive, in performance terms. On most OSs, it is implemented via an atomic check-and-set instruction. However, resource conflicts arise when multiple threads running on different CPUs attempt to access the allocator concurrently: Each thread attempts to acquire the mutex, creating a lock conflict. To resolve the conflict, the OS has to do a context switch, suspend the thread that attempted to access the allocator, and insert it into the kernel’s waiting queue. When the allocator is released, the current thread is allowed to run and access the allocator. The large number of context switches degrades performance. (Without addressing the underlying issue, the same application would perform much better on a single CPU.)

One way to resolve these conflicts is to create an allocator that simply avoids them. Most modern operating systems support the concept of per-thread storage, or a memory pool that is assigned to an individual thread. A thread allocator associates a memory block with each thread and services the thread’s memory requests from this block, without interfering with other threads’ allocation requests. When the thread allocator runs out of memory, the default allocator assigns it another block. Obviously, the number of lock conflicts is significantly decreased.

|

Segment_1 |

Segment_2 |

Mark_1 |

Mark_2 |

Mark_3 |

Figure 2: The stack segments never overlap.

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

S Bytes Long |

|

|

|

|

|

|

|

|

||||

|

1 1 0 0 |

. . . |

1 0 0 . . . 0 0 1 |

|

|||||

|

|

|

|

|

|

|

QS zeros |

|

|

|

|

|

|

|

|

|

|

|

|

Figure 3: The allocator searches its bitmap.

74 |

Dr. Dobb’s Journal, December 2005 |

http://www.ddj.com |

One scenario requiring special attention is threads sharing objects: An object allocated in one thread is later freed in another. The thread allocator handles this by detecting the attempt to free the object and redirecting this request to the thread that performed the original allocation. This could take the form of linking all objects allocated by one thread into a linked-list. This would require a mutex to synchronize access to the list, so this mechanism would be efficient only if the number of objects migrating between threads is relatively small compared to the number of stationary objects. It should be mentioned that even intentionally allocating objects in one thread and freeing them in another can result in sharing of cache line among processors, and its attendant performance degradation.

In database systems, the “stay-at-home” behavior of objects, discussed earlier, is usually enforced by the DBMS itself. Each thread performs its database access in the context of a database transaction, and these are isolated from one another by the database transaction manager. Thus, in database design, it is often possible to entirely skip the issue of migrating objects in the allocator. A stack-based allocator (such as those described in this article) usually represents a good choice for implementing a thread-local allocator. The combination of the stack and the thread-local storage allows development of synchronization-free memory managers that avoid lock contention when accessing memory, resulting in improved database performance on multiprocessor systems.

Bitmap Allocators

A bitmap allocator acts on a memory pool by allocating objects in prespecified units (an allocation quantum) such as a word or a double-word. A bitmap is a vector of bitflags, with each bit corresponding to one quantum of memory. A bit value of 0 indicates a free quantum, while 1 indicates

an allocated quantum. The memory overhead — the space required to keep the bitmap itself — is modest. For example, if the quantum size is 32 bytes, the required bitmap size is 256 times smaller. Thus, to map 1 GB of space, the required bitmap size is just 4 MB. Given this example, to allocate an object of size S, the allocator must locate a contiguous area of free memory that contains QS=(S+31)/32 quantum blocks. The allocator does that by searching its bitmap to find a zero bits sequence QS long and turning over the bits once this sequence is found (Figure 3).

One of the common ways to improve bitmap allocator performance is to search for free memory (a hole) by looking up the bitmap starting from the bitmap position where the previous lookup was left off, rather than from the beginning of the bitmap. When the allocator locates the free hole, the current bitmap position is updated. In addition to its speed advantage, this technique increases the locality of reference (objects allocated one after another are positioned sequentially in memory). Another way to speed up the bitmap lookup is to replace bit-by-bit scans with more efficient techniques. It is possible to scan the bitmap bit-by-bit (see Listing Four, available electronically).

However, the bitmap can be more efficiently scanned one byte at a time using 256-way lookup tables to detect a free hole of the desired length (Listing Five, available electronically).

The first table specifies the number of leading zeros in each byte; the second table represents the number of trailing zeros in each byte. The last two arrays specify the number and the offset of the longest sequence of clear bits in the byte. Using these tables, the allocator can scan the bitmap as in Listing Six (available electronically).

Bitmap allocators have a number of advantages. One feature that is especially important in database development is that the

bitmap mechanism can allocate a group of objects sequentially, thus maintaining locality of reference. In database development, the increased locality of reference means that objects allocated sequentially would be placed on the same database page. Consequently, these objects would be loaded into memory using just one read operation from disk, in contrast to multiple reads that would be required to fetch the objects if they were located in different parts of the storage. For a main memory database, locality of reference is less critical — after all, memory is a randomly accessed device. However, modern systems often include a secondary cache (L2 cache) that benefits from increased locality of reference as well.

Furthermore, the bitmap allocator can keep fragmentation at bay. When objects are allocated by a quantum that is comparable to their own size, small unused holes (such as those accumulated by linked-list allocators) are never created.

Another performance advantage lies in the fact that bitmaps themselves are not interleaved with main storage, which improves the locality of searching. The locality of writes may also be improved because freeing objects only modifies the bitmap.

Apart from database systems, bitmap allocators are quite commonly used in garbage collectors, database dump utilities, and filesystems’ disk block managers — areas where enforcing locality of reference and reduced fragmentation are imperative.

Conclusion

For developers of database systems, filesystems, compilers, and similar applications, creating custom memory managers rather than using an operating environment’s existing allocators entails extra coding — sometimes a great deal of it. But such allocators, once written, establish an infrastructure that permits optimization.

DDJ

Listing One

template<class T>

class fixed_size_object_allocator { protected:

T* free_chain; public:

T* allocate() {

T* obj = free_chain; if (obj == NULL) {

obj = new T(); } else {

free_chain = obj->next;

}

return obj;

}

void free(T* obj) { obj->next = free_chain; free_chain = obj;

}

fixed_size_object_allocator() { free_chain = NULL;

}

~fixed_size_object_allocator() { T *obj, *next;

for (obj = free_chain; obj != NULL; obj = next) { next = obj->next;

delete obj;

}

}

};

Listing Two

class BlockAllocator

{

void* allocate(size_t size)

{

if (size + sizeof(object_header) <= page_size/2)

{

int n = block_chain[((size+sizeof(object_header)+7)>>3)-1]; storage_free_block* bp = hdr->free_block_chain[n];

if (bp != NULL) { hdr->free_block_chain[n] = bp->next; bp->size = size;

return (object_header*)bp+1;

}

}

// allocate a new block using some external allocator return alloc_block(size);

}

void free(object* obj)

{

object_header* hp = get_header(obj);

if (hp->size + sizeof(object_header) <= page_size/2)

{

int n=block_chain[((hp->size+sizeof(object_header)+7)>>3)-1]; storage_free_block* bp = (storage_free_block*)hp;

bp->next = hdr->free_block_chain[n]; hdr->free_block_chain[n] = bp;

}else {

// return the block back to the memory pool free_block(hp);

}

}

};

DDJ

http://www.ddj.com |

Dr. Dobb’s Journal, December 2005 |

75 |

P R O G R A M M I N G P A R A D I G M S

New Orleans Memories

Michael Swaine

What has happened down here Is the winds have changed Clouds roll in from the north And it started to rain

—Randy Newman, “Louisiana 1927”

I’ve only visited New Orleans twice, once at the terminus of a 4000-mile camping trip with Nancy and once with other Dr. Dobb’s staff for the fourth annual ACM conference on Object-Oriented Programming, Systems, Languages & Applications — OOPSLA ’89, in other words. One trip was in the year of the Oakland Hills fire, at that time, the worst urban disaster in U.S. history; the other in the year

of the Loma Prieta earthquake.

The two trips blur into one musicand food-suffused montage. Street scenes and snatches of Dixieland, faces and voices, all come back to me stripped of context: fragmentary images that bob to the surface and float away.

A colorfully dressed stranger in the French Quarter offering to bet me that he could guess where I got my shoes. A young woman with braided hair sitting on the sidewalk on Magazine Street playing a saw. Tiny boys who don’t look like they’ve been walking all that long tap-dancing in the street as tourists weave around them. A trumpet player in a baby-blue sportscoat sitting across the table from me and talking about software development.

The trumpet player is also a piano player, composer, ventriloquist, short-story writer, cartoonist, C programmer, Dr. Dobb’s columnist, and friend. Al Stevens calls Cocoa Beach, Florida, home — but either there or here in New Orleans, the babyblue sportscoat looks appropriate. Al’s in town for OOPSLA, too.

I remember that dinner, and can almost pick out all the faces around the table. It was, like every meal I ever ate in New Orleans, memorable. Many of my memories of New Orleans involve food.

Eating beignets for breakfast, getting powdered sugar in my beard. The best beignets, I’m told, are to be found at CDM, the famous Cafe Du Monde, operating in the French Quarter since 1862. Right on the bank of the Mississippi River.

“Nowhere,” John McPhee wrote in an essay two years before that OOPSLA con-

Michael is editor-at-large for DDJ. He can be contacted at mike@swaine.com.

ference, “is New Orleans higher than the river’s natural bank…The river goes through town like an elevated highway.”

Chicory and Po-Boys

In National Geographic

And the Times-Picayune They forecast the apocalypse Said it was coming soon

— David Rovics, “New Orleans”

I’m a coffee drinker and I want to like New Orleans coffee, which by unvarying tradition is always cut with chicory. Maybe it’s an acquired taste. Maybe it just doesn’t pack enough caffeine kick for my longestablished habit. As I am a reader by even longer-established habit, I read the street signs: Abundance, Pleasure, and of course Desire. There’s a bus line here called “Cemeteries.” I read the brochures, read that the chicory tradition began during the Civil War, when New Orleanians stretched war-scarce coffee with the roasted and ground root of the locally harvested endive plant in response to the Union blockade of the Port of New Orleans.

In the century and a half since the Civil War, the Port of New Orleans had grown to become the largest port in the United States and the fifth largest port in the world. Until September 2005.

I remember eating po-boys for lunch. Po-boys are something like submarine sandwiches, crunchy-crusted French bread with just about any filling, served plain or dressed. But it is specifically oyster poboys that I remember with such fondness.

Seafood and fresh-water fish or shellfish are a big part of New Orleans cuisine. I remember snacking on crawdads spilled out on newspaper, taking a taxi to a dinner that I am pretty sure featured fish, in a good restaurant in an old mansion in the Garden District. I understand that this area was among the least affected by the storm and its aftermath. “In New Orleans,” McPhee wrote in 1987, “income and elevation can be correlated on a literally sliding scale: the Garden District on the highest level, Stanley Kowalski in the swamp.”

Later, walking through the French Quarter with a drink in one hand and a snack in the other, between ornate balconies overhanging the sidewalks, surfeited but tempted by the good smells all around. New Orleans had more well-known chefs

than any other city its size, and more good places to eat. Now even famed television celebrity chef Emeril Lagasse’s three New Orleans restaurants are closed, his web site turned into an online contact point for refugee employees widely scattered in the New Orleans diaspora.

Lost and Scared

My memory is muddy, What’s this river that I’m in? New Orleans is sinking And I don’t wanna swim

—The Tragically Hip, “New Orleans Is Sinking”

I remember checking into a French Quarter hotel on a hidden courtyard down some anonymous alley. Dinner that night in a restaurant so tucked away on an alley/ courtyard that I don’t know how we even found it. The live music may have led us there, although live music is like air in the French Quarter. The next day finding in a funky little curio shop a cleverly framed Picasso print that we had to have. Hidden treasures.

Some of these memories are from that camping trip, others from OOPSLA. They run together like they’d been left out in the rain. This memory is definitely from the camping trip, though:

That summer, we had come crosscountry in our camper van, arriving in New Orleans late in the evening. Looking for a campground in a black downpour, we ended up we-had-no-idea-where outside of town next to some body of water. Lake Pontchartrain? The Mississippi River? It was too dark and rainy to tell and the people running the place spoke a dialect of English we didn’t understand. Parked in our van a few dozen feet horizontally and maybe two feet vertically from the troubled surface of the dark water, thunder booming around us and the water rising, we didn’t get much sleep. In the morning, we realized that we had never been in any real danger.

But the memory of that night comes back to me vividly these days as I think about the catastrophe of New Orleans 2005.

OOPSLA ‘89

I added up the preregistration and on-site registration lists, and came up with 1626 registered attendees of OOPSLA ’89. You may have to just take my word for it that

76 |

Dr. Dobb’s Journal, December 2005 |

http://www.ddj.com |

this was a lot of people for an objectoriented programming conference back then. It was easy to conclude, I concluded, that there was an object-oriented programming revolution underway.

Today, the object-oriented paradigm is taken for granted, and proponents of newer approaches like Aspect-Oriented Programming position their paradigm against it, like a candidate running against an incumbent. But in 1989, OOP was the coming thing. I thought it might be interesting to look back at some of the highlights of that conference at the New Orleans Hyatt Regency in the first week of October 1989.

The exhibit floor showed which companies considered OOP important enough to rent booth space: Digitalk, The Whitewater Group, and Interactive Software Engineering had prominent placement, as did Apple and Borland. On the other hand, Microsoft didn’t exhibit, although they had plenty of employees at the conference.

The conference itself was chaired by Kent Beck, currently a neighbor of mine here in Southern Oregon. Kent was with Apple then, and he and Ward Cunningham gave a talk on the teaching of OOP. Before the regular sessions there were two days of tutorials on what were considered the most important OOP environments, including Smalltalk, C++, NextStep, and a portable C++ class library for UNIX called ET++.

The keynote address was by Peter Wegner, who wrote the first book on Ada and got into OOP because of his perception of the deficiencies of Ada for software engineering. He left little doubt that he considered software engineering to be the proper goal for OOP. Wegner’s latest book, out this year from Springer-Verlag, is called Interactive Computation: The New Paradigm.

The ’89 conference marked a new stage of maturity for OOP: Kent Beck explained that, while previous OOPSLA conferences had included sessions on such related topics as general software engineering, user interface design, and databases, this year’s conference would focus more tightly on OOP theory, OOP language design and implementation, and concurrency.

Class Warfare

There’s a perpetual problem for those who would define and name paradigms in technology: As soon as you assign two or more attributes to a paradigm, someone will come up with an approach that includes all but one of the attributes. It was clear at that ’89 conference that there would be no precise definition of OOP that would encompass all the interesting work going on in the area. The SELF language, the subject of one session, is a good example. Can a language that has objects and inheritance but that lacks classes qualify as object oriented?

Software engineering and the tantalizing vision of interchangeable software parts was the subject of a number of sessions. Brad Cox, who had written a new object-oriented version of C called “Objective-C,” led a discussion on what was optimistically called the “Software Engineering Revolution.” Brad’s still on the ramparts in that revolution, but he mostly programs these days in Java, Ruby, Python, and Perl, rather than his own language.

A number of sessions described progress in real-world applications of OOP. I don’t know if you’d consider Star Wars, also known as the Strategic Defense Initiative, real-world, but other presentations addressed the application of OOP to practical issues in commercial software development, CAE/CAD, and scientific computing. The scientific computing papers suggested that most people working on OOP in this area were using either C++, Smalltalk, or CLOS, the Common Lisp Object System. These scientific sessions made it glaringly clear that the ability to construct 3D computer models of objects and processes and to manipulate the models had by then become an essential tool of scientific research.

It was also clear that OOP solutions had some real challenges in efficiency and performance, as indicated by the fact that many systems dropped out to plain C code in critical sections, and by the sessions on the search for ways to speed-up garbage collection.

Sun was there, too, of course. These days, Scott McNealy is Sun Microsystems’ point man for Infuriating People and Insulting Competitors. But in New Orleans that fall, it was Bill Joy saying that he didn’t consider traditional data-processing professionals a market for OOP because they wouldn’t understand it — and adding that, to be fair, he’d say the same about most

Cprogrammers.

Some of the chief issues pertaining to

pushing the state of the art that came up at the conference were persistent objects, parallelism, agents, and reflection. There are many kinds of software agents, but one particular kind was on people’s minds at the conference. Several talks dealt with developing agents to represent the user— or programmer or maybe the program — in searching for objects in some future world of sharable, reusable software components spread among widely distributed systems. Still something of a dream, but a little closer to reality today, maybe.

During one of the panel discussions, C++ creator Bjarne Stroustrup said that anyone claiming that object-oriented programming will bring about bug-free software was probably spending evenings in

United States Postal Service

Statement of Ownership, Management, and Circulation

1.Publication title: Dr. Dobb’s Journal

2.Publication number: 1 0 4 4 ---- 7 8 9 X

3.Filing date: 10/1/05

4.Issue frequency: Monthly

5.Number of issues published annually: 12

6.Annual subscription price: $34.95

7.Complete mailing address of known office of publication: CMP Media LLC, 600 Harrison Street, San Francisco, CA 94107

8.Complete mailing address of headquarters or general business office of publisher: CMP Media LLC, 2800 Campus Drive, San Mateo, CA 94403

9.Full names and complete mailing addresses of publisher, editor, and managing editor: Publisher Michael Goodman, CMP Media LLC, 2800 Campus Drive, San Mateo, CA 94403; Edi- tor-in-Chief Jonathan Erickson, CMP Media LLC, 2800 Campus Drive, San Mateo, CA 94403; Managing Editor Deirdre Blake; CMP Media LLC, 2800 Campus Drive, San Mateo, CA 94403

10.Owner, full name: CMP Media LLC. Complete mailing address: 600 Community Drive, Manhasset, NY 11030-3847. An indirect, wholly owned Subsidiary of United Business Media plc, Ludgate House, 245 Blackfriars Road, London, SE1 9UY UNITED KINGDOM

11.Known bondholders, mortgages, and other security holders owning or holding 1 percent or more of total amount of bonds, mortgages, or other securities: None

12.Not non profit: N/A

13.Publication title: Dr. Dobb’s Journal

14.Issue date for circulation data below: Average from July 2004 to June 2005 and October 2005 issue

15.Extent and nature of circulation:

a.Total number of copies (net press run) Average number of copies each issue during preceding 12 months: 145,188. Actual number of copies of single issue published nearest to filing date: 142,924.

b.Paid and/or requested circulation. (1) Paid/requested out- side-county mail subscriptions stated on form 3541. Average number of copies each issue during preceding 12 months: 108,538. Actual number of copies of single issue published nearest to filing date: 114,186. (2) Paid in-coun- ty subscriptions stated on form 3541. Average number of copies each issue during preceding 12 months: 0. Actual number of copies of single issue published nearest to filing date: 0. (3) Sales through dealers and carriers, street vendors, counter sales, and other non-USPS paid distribution. Average number of copies each issue during preceding 12 months:14,342. Actual number of copies of single issue published nearest to filing date: 11,497. (4) Other classes mailed through the USPS. Average number of copies each issue during preceding 12 months: 0. Actual number of copies of single issue published nearest to filing date: 0.

c.Total Paid and/or Requested Circulation [Sum of 15b. (1), (2), (3), (4)]. Average number of copies each issue during preceding 12 months: 122,880. Actual number of copies of single issue published nearest to filing date: 125,683.

d.Free distribution by mail (samples, complimentary, and other free): (1) Outside-County as Stated on Form 3541. Average number of copies each issue during preceding 12 months: 0. Actual number of copies of single issue published nearest to filing date: 0. (2) In-County as Stated on Form 3541. Average number of copies each issue during preceding 12 months: 0. Actual number of copies of single issue published nearest to filing date: 0. (3) Other Classes Mailed Through the USPS. Average number of copies each issue during preceding 12 months: 0. Actual number of copies of single issue published nearest to filing date: 0.

e.Free distribution outside the mail (carriers or other means). Average number of copies each issue during preceding 12 months: 2,510. Actual number of copies of single issue published nearest to filing date: 2,872.

f.Total free distribution (Sum of 15d and 15e). Average number of copies each issue during preceding 12 months: 2,510. Actual number of copies of single issue published nearest to filing date: 2,872.

g.Total distribution (Sum of 15c and 15f). Average number of copies each issue during preceding 12 months: 125,390. Actual number of copies of single issue published nearest to filing date: 128,555.

h.Copies not distributed. Average number of copies each issue during preceding 12 months: 19,799. Actual number of copies of single issue published nearest to filing date: 14,369.

i.Total (Sum of 15g and 15h). Average number of copies each issue during preceding 12 months: 145,189. Actual number of copies of single issue published nearest to filing date: 142,924.

j.Percent paid and/or requested circulation. (15c divided by 15g times 100). Average number of copies each issue during preceding 12 months: 98%. Actual number of copies of single issue published nearest to filing date: 98%.

16. Publication on statement of ownership. Publication required. Will be printed in the December 2005 issue of this publication.

I certify that all information furnished on this form is true and complete.

Michael Goodman, Publisher

http://www.ddj.com |

Dr. Dobb’s Journal, December 2005 |

77 |

the French Quarter guessing where people got their shoes.

I guess he ran into that guy, too.

Katrina Mashups

The online community— and this is one of those cases where that expression is not an

oxymoron — responded quickly and in many ways to hurricane Katrina. Among those responses were some creative applications of map-based mashups, including visual plots of Craigslist refugee housing locations and maps showing information about affected locations in New Orleans.

Links to blog topics are often ephemeral, but http://googlemapsmania.blogspot.com/ is at least a starting point for reading about mashups and for tracking down some of these clever and useful hacks.

DDJ

Solution to “Feedback Strategies,” |

Dr. Ecco Solution |

ability Pgood you will be one column |

|

DDJ, November 2005 |

closer to your goal and with proba- |

1. The probability of winning under |

bility 1–Pgood you will be one farther |

FeedYes is about 0.89 when Pgood is |

from the goal both with n–1 steps. |

0.9. It cannot be better than 0.9 be- |

Under the FeedNo strategy, the prob- |

cause if one is on the second from top |

ability is about 0.55. To compute the |

row and is adjacent to the winning |

probability for FeedNo, assume a strat- |

square, there is some chance of los- |

egy that will take four aims to the right |

ing. The strategy for FeedYes consists |

and three aims to the left since this |

of trying to reduce the horizontal dis- |

gives the best likelihood of hitting the |

tance to the goal to zero (or to one |

destination. Given this, there are four |

on rows that are an odd distance |

ways to win: All seven aims are true; |

away). Calculating the probability in- |

three of the right aims are true and two |

volves the following key recurrence |

of the left are; two of the right are true |

ideas: If you are n steps (n>0) away |

and one of the left; and one right and |

from the top row and 0 distance away, |

zero left. So, the feedback dividend is |

then your probability of hitting is the |

about 1.6. |

same as the probability of hitting if |

2. The value of Pgood, for which the div- |

you are n–1 steps away and one away |

idend ratio is highest, is not 0.75 as one |

from column 5. Otherwise, with prob- |

might think but something around 0.772. |

For that value, the dividend ratio reaches 1.99. I don’t believe it ever reaches 2.

3.In both the FeedNo and FeedYes cases, the analysis combines short trips: One wants to go from row 1 column 4 to row 3 column 4 in the first two moves then to row 5 column 4 in the second two moves, then row 7 column 4, and finally row 8 column 5. For FeedNo, this gives a formula like the following:

((((Pgood2)+(1–Pgood )2))3)×Pgood

For FeedYes, this gives a formula that is Pgood4. This gives a maximum feedback dividend of 1.75 when Pgood is 0.71.

Alan Dragoo helped with these solutions.

DDJ

Dr. Dobb’s Journal “Wit” T-Shirt Contest

Dr

with

If

your

your

By full

And the

winners are...

Birth |

Quick. |

|

School |

Accurate. |

|

Inexpensive. |

||

<code> |

||

Pick two |

||

Death |

||

Nancy Terwilliger |

||

|

Because Dr. Dobb's said so.

Stephen Craver

full details at www.ddj.com/contest.html/

hilarious t-shirts and/or bumper stickers for

-

-

among

Journal

(except

78 |

Dr. Dobb’s Journal, December 2005 |

http://www.ddj.com |